What is Natural Language Processing?

Natural Language Processing (NLP) is a field of machine learning and linguistics focused on the interaction between computers and human languages.

It involves processing, analyzing, and generating natural language data. NLP is used for many tasks, including the following:

Text Summarization: Creating concise summaries of long documents while retaining key information. This is particularly useful in news aggregation, legal document analysis, and academic research.

Text Classification: Categorizing text into predefined classes or categories. Applications include spam detection, sentiment classification, and tagging customer inquiries.

Speech Recognition: Converting spoken language into written text, enabling applications like virtual assistants (e.g., Siri, Alexa), transcription services, and voice-activated control systems.

Sentiment Analysis: Determining the emotional tone behind a series of words to understand the attitudes, opinions, and emotions expressed within the text. This is widely used in social media monitoring, customer feedback analysis, and market research.

Machine Translation: Automatically converting text from one language to another while maintaining the original meaning. Popular applications include translation services like Google Translate and multilingual customer service chatbots.

NLP isn’t limited to these tasks. It also covers named entity recognition (NER), part-of-speech tagging, and topic modeling, all of which can make applications more intuitive and efficient. To tackle these challenges, Hugging Face Transformers provides state-of-the-art pre-trained models and resources for a wide range of NLP applications.

Understanding Hugging Face Transformers

Transformer models can handle a wide range of NLP tasks, including those mentioned above. Hugging Face Transformers is an open-source library that has changed the field of natural language processing by providing easy access to pre-trained transformer models. These models handle tasks ranging from text classification to more complex challenges like machine translation and named entity recognition.

What is a Transformer Model?

A transformer model is a type of deep learning model introduced in the groundbreaking 2017 paper "Attention Is All You Need" by Ashish Vaswani and colleagues at Google Brain, Google Research, and the University of Toronto.

Unlike previous models, transformers use self-attention, which allows them to weigh the importance of different words in a sentence and better understand the context.

This makes them highly effective for processing and generating natural language data. Some transformer-based systems can even translate text and speech in near real time.
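To make the idea of self-attention more concrete, here is a minimal sketch in plain Python. It is a simplification under stated assumptions: real transformers apply learned query, key, and value projections and use several attention heads, while this toy version uses the token vectors directly. The embeddings are made-up numbers, and the function names are illustrative only.

```python
import math


def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors."""
    dim = len(vectors[0])
    weights = []
    for query in vectors:
        # Score every token against the current one, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights.append(softmax(scores))
    # Each output vector is a weighted average of all token vectors.
    outputs = [
        [sum(w * value[i] for w, value in zip(row, vectors)) for i in range(dim)]
        for row in weights
    ]
    return weights, outputs


# Toy 2-dimensional "embeddings" for three tokens (made-up values).
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

weights, outputs = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one token attends to every token in the sentence, and each row sums to 1.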

Hugging Face offers an extensive collection of pre-trained models available on their Model Hub. Here are some of the key models and their general applications:

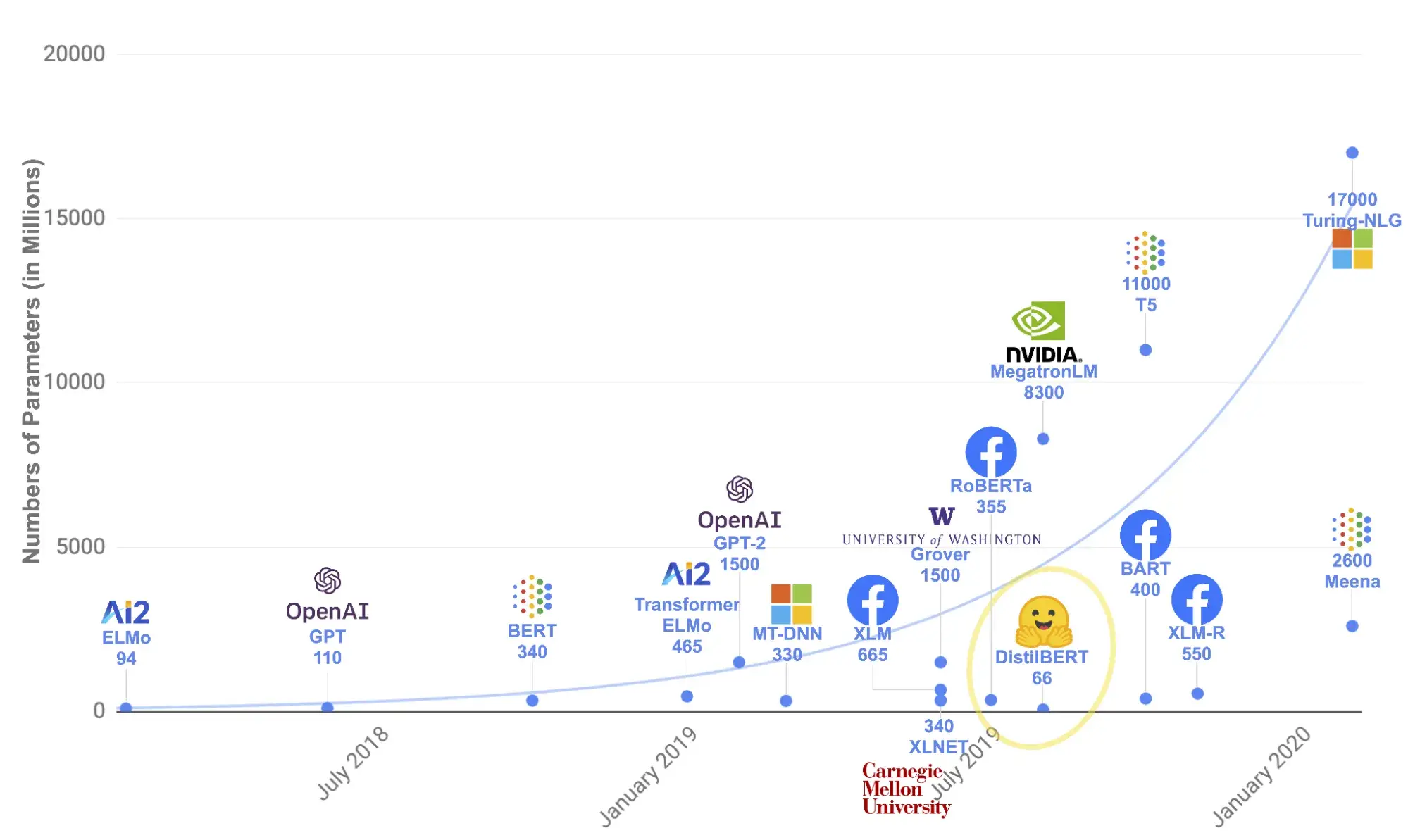

- BERT (Bidirectional Encoder Representations from Transformers): Ideal for understanding the context of a word in a sentence by looking at the words before and after it. It is widely used for sentiment analysis, question answering, and named entity recognition.

- GPT (Generative Pre-trained Transformer): Focused on generating coherent and contextually relevant text, making it perfect for tasks like text completion, dialogue generation, and creative writing.

- RoBERTa (Robustly Optimized BERT Pretraining Approach): An optimized version of BERT that delivers better performance on various NLP tasks by training with more data and longer sequences.

- T5 (Text-To-Text Transfer Transformer): A versatile model that converts all NLP tasks into a text-to-text format, making it flexible for many applications such as translation, summarization, and classification.

- DistilBERT: A smaller, faster, cheaper, and lighter version of BERT trained using knowledge distillation. It is ideal for deploying NLP models in resource-constrained environments.

- LUKE (Language Understanding with Knowledge-based Embeddings): Enhances transformers by incorporating structured knowledge from external knowledge bases, which improves performance on tasks like named entity recognition and relation classification.

- GPT-3: A much larger model in the GPT series, capable of generating highly coherent and contextually relevant text and performing tasks like question answering, language translation, and creative writing with little or no fine-tuning. Unlike the other models listed here, GPT-3 is accessed through OpenAI's API rather than downloaded from the Model Hub.

Models according to their innovation timeline

Why Use Hugging Face Transformers?

There are several benefits to using Hugging Face Transformers. Some of them include:

Ease of Use: The library is designed to be user-friendly, with extensive documentation and tutorials to help users get started quickly.

Pre-trained Models: Access to a wide range of pre-trained models means you can leverage state-of-the-art NLP capabilities without needing to train models from scratch.

Versatility: The library supports multiple tasks and can be integrated with both PyTorch and TensorFlow, offering flexibility in terms of deployment.

Community and Support: A vibrant community contributes to continuous improvement and offers support through forums and GitHub.

Cost-Effective: Reusing pre-trained models saves the compute cost of training a model from scratch.

Set Up Your Working Environment with Google Colab

Google Colab provides a free and convenient environment to run your NLP projects using Hugging Face Transformers. Here’s a step-by-step guide to setting up and testing your models in Google Colab:

1. Go to Google Colab and sign in with your Google account.

2. Click on "New Notebook" to create a fresh Colab notebook.

3. Install the transformers library with either PyTorch or TensorFlow in your new Colab notebook, depending on your preference.

!pip install transformers

!pip install torch # for PyTorch

# !pip install tensorflow # for TensorFlow, uncomment if using TensorFlow

4. Once the installation is complete, import the necessary libraries for Hugging Face Transformers.

from transformers import pipeline

5. Verify Installation with a Simple Example

Run a simple example to ensure everything is set up correctly. Let’s use a pre-trained sentiment analysis model to test the setup.

classifier = pipeline('sentiment-analysis')

result = classifier('I love using Hugging Face Transformers!')

print(result)

If everything is set up correctly, you should see an output indicating the sentiment of the input text.

[{'label': 'POSITIVE', 'score': 0.9998766183853149}]
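If the example does not run, a quick way to narrow down the problem is to check which packages are actually installed. The sketch below uses only the Python standard library; the `installed_version` helper is a hypothetical name introduced for illustration.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Report the status of the packages installed in the steps above.
for name in ("transformers", "torch", "tensorflow"):
    version = installed_version(name)
    print(f"{name}: {version or 'not installed'}")
```

Only one of torch or tensorflow needs to be present, so a "not installed" line for the other is expected.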

Choosing the Right Pre-trained Model for Your Project

Selecting the appropriate transformer model is important for achieving the best results, and the choice depends on the specific task you aim to accomplish. Here are some guidelines to help you choose the right model:

Text Generation: GPT-2, which is available on the Model Hub, is a suitable choice for generating coherent and contextually relevant text. GPT-3 offers stronger generation but is accessed through OpenAI's API rather than the Transformers library.

Question Answering: BERT, RoBERTa, or ALBERT are excellent choices for extracting answers from a given context due to their strong contextual understanding of the passage.

Sentiment Analysis: Models like DistilBERT or BERT are highly effective in determining a text's sentiment because they can understand context and nuances in language.
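The guidelines above can be captured in a small lookup table. The sketch below is illustrative only: the `SUGGESTED_CHECKPOINTS` mapping and the `pipeline_kwargs` helper are hypothetical names, not part of the Transformers library, and the checkpoint identifiers are assumed to be available on the Model Hub under these names.

```python
# Example checkpoints per task (assumed Model Hub identifiers, chosen for illustration).
SUGGESTED_CHECKPOINTS = {
    "text-generation": "openai-community/gpt2",
    "question-answering": "distilbert/distilbert-base-cased-distilled-squad",
    "sentiment-analysis": "distilbert/distilbert-base-uncased-finetuned-sst-2-english",
}


def pipeline_kwargs(task):
    """Build the keyword arguments for a pipeline call for a known task."""
    if task not in SUGGESTED_CHECKPOINTS:
        known = ", ".join(sorted(SUGGESTED_CHECKPOINTS))
        raise ValueError(f"No suggestion for task {task!r}; known tasks: {known}")
    return {"task": task, "model": SUGGESTED_CHECKPOINTS[task]}


print(pipeline_kwargs("question-answering"))
```

The returned dictionary can be unpacked into the pipeline call, for example `pipeline(**pipeline_kwargs("question-answering"))`.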

Common NLP Tasks with Hugging Face Transformers

Now that you have a better understanding of Transformers and the Hugging Face platform, we will walk you through the following real-world scenarios: language translation, summarization, question answering, and sentiment analysis.

Language Translation

Language translation converts text from one language to another, maintaining the original meaning. This is particularly useful in multilingual communication and document translation.

Example: English to German Translation

from transformers import pipeline

text = "translate English to German: Hello, How are you?"

translator = pipeline(task="translation", model="google-t5/t5-small")

translator(text)

Output:

[{'translation_text': 'Hallo, wie geht es Ihnen?'}]

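T5 decides what to do from the task prefix at the start of the input text, which is why the example begins with "translate English to German:". The sketch below shows one way to build that prefix and to read the result. Both helper names are hypothetical, the translation pipeline is assumed to return a list of dictionaries with a `translation_text` key, and the sample output is a stand-in that reuses the German text from the example above rather than a fresh model run.

```python
def build_t5_prompt(source_language, target_language, text):
    """Prepend the task prefix that T5 expects for translation."""
    return f"translate {source_language} to {target_language}: {text}"


def first_translation(pipeline_output):
    """Pull the translated string out of a translation pipeline result."""
    return pipeline_output[0]["translation_text"]


prompt = build_t5_prompt("English", "German", "Hello, How are you?")
print(prompt)

# Stand-in for what translator(prompt) returns; not produced by running a model here.
sample_output = [{"translation_text": "Hallo, wie geht es Ihnen?"}]
print(first_translation(sample_output))
```
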
Summarization

Summarization produces a shorter version of a longer text while preserving most of the original document's meaning. It is a sequence-to-sequence task whose output is a shorter text sequence than the input.

Example: Text Summarization

from transformers import pipeline

summarizer = pipeline(task="summarization")

summarizer(

"In this work, we presented the Transformer, the first sequence transduction model based entirely on attention, replacing the recurrent layers most commonly used in encoder-decoder architectures with multi-headed self-attention. For translation tasks, the Transformer can be trained significantly faster than architectures based on recurrent or convolutional layers. On both WMT 2014 English-to-German and WMT 2014 English-to-French translation tasks, we achieve a new state of the art. In the former task our best model outperforms even all previously reported ensembles."

)

Output:

[{'summary_text': ' The Transformer is the first sequence transduction model based entirely on attention . It replaces the recurrent layers most commonly used in encoder-decoder architectures with multi-headed self-attention . For translation tasks, the Transformer can be trained significantly faster than architectures based on recurrent or convolutional layers .'}]
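The summary above contains a leading space and spaces before the periods. A small post-processing step can tidy this up before the text is shown to users. This is a minimal sketch; `tidy_summary` is a hypothetical helper, and the sample input is shortened from the output above.

```python
import re


def tidy_summary(pipeline_output):
    """Return the summary text with stray spaces before punctuation removed."""
    text = pipeline_output[0]["summary_text"]
    # Remove whitespace that appears directly before punctuation marks.
    text = re.sub(r"\s+([.,;:!?])", r"\1", text)
    return text.strip()


sample_output = [
    {
        "summary_text": " The Transformer is the first sequence transduction "
        "model based entirely on attention . It replaces the recurrent layers ."
    }
]
print(tidy_summary(sample_output))
```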

Question Answering

Question answering involves extracting answers from a given context, which is useful in search engines, virtual assistants, and customer support.

Example: Question Answering

from transformers import pipeline

question_answerer = pipeline("question-answering")

question_answerer(

question="Where do I work?",

context="My name is Sylvain and I work at Hugging Face in Brooklyn",

)

Output:

{'score': 0.6385916471481323, 'start': 33, 'end': 45, 'answer': 'Hugging Face'}
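The `start` and `end` values in the output are character offsets into the context string, so slicing the context with them recovers the answer. The sketch below reuses the result shown above; `highlight_answer` is a hypothetical helper added for illustration.

```python
context = "My name is Sylvain and I work at Hugging Face in Brooklyn"
result = {"score": 0.6385916471481323, "start": 33, "end": 45, "answer": "Hugging Face"}


def highlight_answer(context, result, marker="**"):
    """Wrap the answer span in markers so it stands out in the context."""
    start, end = result["start"], result["end"]
    return context[:start] + marker + context[start:end] + marker + context[end:]


# The offsets point at the same text as the 'answer' field.
assert context[result["start"]:result["end"]] == result["answer"]
print(highlight_answer(context, result))
```

This is handy when you want to show users where in a document the answer came from.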

Sentiment Analysis

Sentiment analysis determines the emotional tone behind a series of words to understand the attitudes, opinions, and emotions expressed within the text.

Example: Sentiment Analysis

from transformers import pipeline

sentiment_analyzer = pipeline('sentiment-analysis')

result = sentiment_analyzer("I love using Hugging Face Transformers!")

print(result)

Output:

[{'label': 'POSITIVE', 'score': 0.9998766183853149}]
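In an application you may want to act on a prediction only when the model is confident. The sketch below applies a threshold to the score; the `confident_label` helper, the 0.9 threshold, and the "UNCERTAIN" fallback are assumptions made for illustration, not part of the library.

```python
def confident_label(results, threshold=0.9):
    """Return the predicted label, or 'UNCERTAIN' if the score is below the threshold."""
    top = results[0]
    return top["label"] if top["score"] >= threshold else "UNCERTAIN"


# Sample results in the same shape the sentiment pipeline returns.
print(confident_label([{"label": "POSITIVE", "score": 0.9998}]))
print(confident_label([{"label": "NEGATIVE", "score": 0.55}]))
```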

Wrapping Up

Hugging Face Transformers offers a powerful library for NLP projects, simplifying the process from model selection and fine-tuning to deployment. By following this guide, you have gained an overview of Natural Language Processing, Hugging Face, and the Transformers library. With this knowledge, you can effectively implement advanced NLP models and take your applications to new heights.

Frequently Asked Questions

What role does natural language understanding play in interactive voice response systems?

Natural language understanding is crucial in interactive voice response systems, enabling them to comprehend and process human language as users interact. This technology allows for more accurate responses to user requests, enhancing the effectiveness of voice-activated customer service solutions.

How can natural language processing (NLP) be used to improve customer satisfaction and maximize profits?

NLP can improve customer satisfaction by enabling more effective communication through chatbots and customer service tools, offering personalized recommendations and support. By understanding and responding to customer needs efficiently, businesses can enhance customer experience, leading to increased loyalty and sales, which in turn maximizes profits.

How does Machine Learning assist in inventory management for a dropshipping business?

Machine Learning assists in inventory management by analyzing sales data, seasonal trends, and market changes to predict inventory needs, thereby helping maintain optimal stock levels and reducing the risks of overstocking or stockouts.

How does voice recognition technology work with smart devices?

Voice recognition technology uses natural language processing techniques and machine learning to understand and interpret human language, allowing smart devices to respond to spoken commands accurately.

Gift Egwuenu is a developer and content creator based in the Netherlands. She has worked in tech for over 4 years with experience in web development. Her focus is on helping people navigate the tech industry by sharing her work and experience in web development, career advice, and developer lifestyle videos.
