In today’s digitalized society, statistics show that the average person spends 3 hours and 15 minutes online every day, while 1 in 5 smartphone users spends 4.5 hours on their phone daily. Figures like these are why smart businesses take their online presence seriously.

According to a 2023 survey reported by Forbes, 71% of businesses had a website. However, having a website or an online presence doesn't by itself bring in customers. The question is: how can you beat competitors in the same market and come out on top as the highest-ranked business in your industry when users perform a search?

Businesses are constantly looking for ways to optimize their web pages. Ranking high on search engine results pages (SERPs) is possible if you understand how to use search engine optimization (SEO) to your advantage.

SEO is a key factor in website visibility and a familiar term for anyone running an online business. Beyond rankings, SEO also improves the user experience, among other benefits we'll briefly highlight. To achieve growth and sustainability, your website needs to be search-engine optimized.

One component of SEO that makes it easy for search engine bots to crawl your most important pages is the robots.txt file, which gives crawlers directions. In this article, we’ll discuss how to use robots.txt files on a WordPress website.

Prerequisite:

We assume that you’re familiar with WordPress, the content management system that helps users create websites easily, so we won't cover what WordPress is for.

If you're an absolute beginner, here’s a link to our WordPress article to get you started. Before we dive in, we’ll briefly explain what a robots.txt file is and then discuss how to implement one on a WordPress site.

What is a Robots.txt File?

The "robots" in robots.txt are software programs, also known as web crawlers or bots, that search engines deploy to crawl web pages so they can be indexed. Examples of popular web crawlers include Googlebot, YandexBot, Bingbot, and DuckDuckBot.

A robots.txt file implements the Robots Exclusion Protocol, a standard that websites use to communicate with crawlers. It works like a set of rules: a plain-text file containing instructions that tell search engine crawlers and other web robots which URLs on a website they may access and which they should avoid.

When a new website goes live, search engines send crawlers to collect the information needed to index its pages. The crawler gathers information such as keywords and page content and adds it to the search index. When a user performs a search, the search engine retrieves matching results from that index rather than from the website itself.

A robots.txt file is especially recommended for e-commerce websites. On an e-commerce site, users typically search for and filter products, and every search or filter combination can generate new URLs. Crawling all of those URLs eats into the site's crawl budget (the number of pages or URLs a search engine will crawl on a website over a given period), so search engines may skip important pages because they're busy crawling irrelevant ones.
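As a sketch of how this looks in practice, suppose a store's internal search results live under /search/ and its filtered listings under /products/filter/ (both hypothetical paths on a placeholder domain). The example below uses Python's standard `urllib.robotparser` module to check which URLs a crawler would skip. Note that this parser only does simple prefix matching and doesn't understand the `*` and `$` path wildcards Google supports, so the rules stick to plain path prefixes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for a store whose internal search results live under
# /search/ and whose filtered product listings live under /products/filter/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /products/filter/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://shop.example.com/products/red-sneakers",
    "https://shop.example.com/search/sneakers",
    "https://shop.example.com/products/filter/color-red/size-42",
]:
    # can_fetch() applies the group matching the user agent (here the
    # catch-all "*" group) to the URL's path.
    print(url, "->", "crawl" if parser.can_fetch("Googlebot", url) else "skip")
```

Only the product page is reported as "crawl"; the search and filter URLs are reported as "skip", leaving more of the crawl budget for pages you actually want found.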

Without a robots.txt file, search engine robots will crawl, and may index, every accessible page and resource on your website. This can include file directories, images, PDFs, and even Excel spreadsheets containing users' information.

If you don't have a robots.txt file, too many bots may end up crawling your website at once, which can slow it down considerably. But here's the catch: not every website actually needs a robots.txt file. It depends on what your website is about and what you want to achieve.

What Are the Benefits of Using a Robots.txt File for Website Optimization?

There are several benefits to using the robots.txt file, and they are:

- Content Control & Privacy

- Structured Indexing

- Enhance SEO

- Improve User Experience

- Manage External Crawlers

- Crawl Delay

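Content Control and Privacy: The robots.txt file specifies which parts of a website search engine bots should or should not crawl, whether by file path or URL parameters. Keep in mind that robots.txt is publicly readable and is only a request that well-behaved crawlers honor, so truly sensitive files still need proper access control such as password protection.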

Structured Indexing: The robots.txt file points crawlers toward the pages that should be crawled and indexed, so search engines can index a website in an organized manner and surface its relevant content more easily.

Enhance SEO: The robots.txt file improves a website's SEO performance by keeping crawlers away from content that isn't relevant to users' search queries, so crawl attention goes to the pages that matter.

Improve User Experience: A robots.txt file can improve the user experience in several ways, such as keeping crawlers away from duplicate content or blocking them from file directories like scripts.

Manage External Crawlers: The robots.txt file also governs other web crawlers, telling each bot whether it may crawl the website's content.

Crawl Delay: A robots.txt file can keep your server from being overloaded by asking crawlers to wait between requests instead of fetching many pages simultaneously.

Deconstructing the Syntax of robots.txt Files

Let’s break down the syntax of the robots.txt file, which is the key to controlling web crawler access and shaping how your site appears in search.

User-Agent: Names the search engine crawler that the rules which follow apply to. Before it starts crawling, a crawler looks for the robots.txt file in the root folder of your website and checks whether any group of rules names it. If one does, it reads those rules to see what it is allowed to access.

Disallow Rule: Tells the user agent not to crawl certain parts of a website. You can add as many Disallow rules as you like, but each rule must go on its own line.

Allow Rule: Allows the user-agent access to a page or its sub-folders even if the parent folder is disallowed.

Crawl Delay: Tells the crawler to wait a given number of seconds between requests when crawling pages on a site. Not every crawler honors it; Googlebot, for example, ignores this directive.

Sitemap: This tells the search engine crawler where the XML sitemap is located.

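/ (forward slash): The file path separator. Every path in a rule starts with /, which on its own stands for the site's root, so Disallow: / covers the entire site.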

* (Asterisk): A wildcard. In a User-agent line, it means the rules apply to all user agents; in a path, it matches any sequence of characters.

#: Marks a comment; crawlers ignore everything after it on that line.

$: Marks the end of a URL. For example, Disallow: /*.pdf$ blocks every URL that ends in .pdf.
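To see several of these directives together, here is a minimal sketch, assuming a hypothetical site at example.com, that parses a small robots.txt with Python's standard `urllib.robotparser` and asks what a crawler may fetch. One caveat: this parser applies the first rule that matches a URL, whereas Google picks the most specific (longest) match, so the Allow exception is listed before the broader Disallow to give the same result under both. The parser also doesn't support the `*` and `$` path wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt combining comments, User-agent, Allow, Disallow,
# Crawl-delay and Sitemap. Allow comes first because urllib.robotparser
# uses the first matching rule rather than the longest one.
ROBOTS_TXT = """\
# Applies to every crawler
User-agent: *
# Block the private folder, except the public press kit
Allow: /private/press-kit.pdf
Disallow: /private/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/hello-world", "/private/report.pdf", "/private/press-kit.pdf"]:
    url = "https://example.com" + path
    print(path, "allowed" if parser.can_fetch("Bingbot", url) else "blocked")

print("Crawl delay:", parser.crawl_delay("Bingbot"))
print("Sitemaps:", parser.site_maps())  # site_maps() needs Python 3.8+
```

For Bingbot, the blog post and the press kit come back as allowed, the report as blocked, the crawl delay as 10, and the sitemap list holds the single Sitemap URL.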

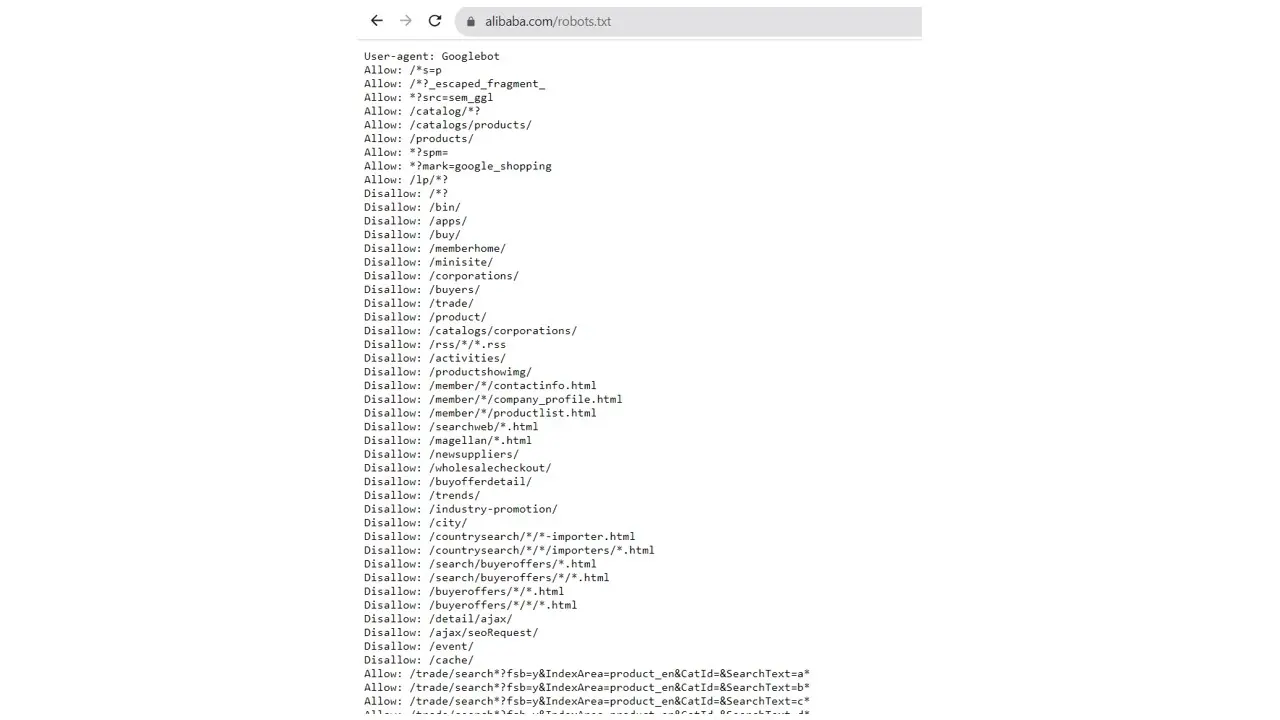

In this example, we’ll look at the robots.txt file of Alibaba.com. You can set different rules based on what your site needs; in this case, the rules consist of Allow and Disallow directives, each followed by a directory path.

In the above image, the user-agent declares which bot the rules apply to. We can see that the rule is created for the search bot “Googlebot”. Allow tells Googlebot what it can crawl and disallow tells it what it shouldn’t access.
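One detail that's easy to miss: a crawler follows only the group that names it and ignores the `*` group entirely when such a group exists. The sketch below illustrates this with hypothetical rules on a placeholder domain (not Alibaba's actual file), again using Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules (NOT Alibaba's actual file): one group just for
# Googlebot and a catch-all group for every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /checkout/

User-agent: *
Disallow: /checkout/
Disallow: /internal-search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/internal-search/phones"
# Googlebot matches its own group, so the "*" group's rules don't apply to it.
print("Googlebot:", parser.can_fetch("Googlebot", url))
# Bingbot isn't named anywhere, so it falls back to the "*" group.
print("Bingbot:", parser.can_fetch("Bingbot", url))
```

Googlebot is allowed into /internal-search/ because its own group doesn't block that path, while Bingbot falls back to the `*` group and is blocked. If you want Googlebot to follow the general rules as well, repeat them inside its group.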

How to Use WordPress's Robots.txt File

WordPress automatically serves a virtual robots.txt file at yoursite.com/robots.txt. By default, it lets web crawlers access your site while keeping them out of the /wp-admin/ dashboard area. Because this file is generated on the fly, you won't find it in your site's root directory until you create a physical one. Creating a custom robots.txt file is simple, and there are two main methods:

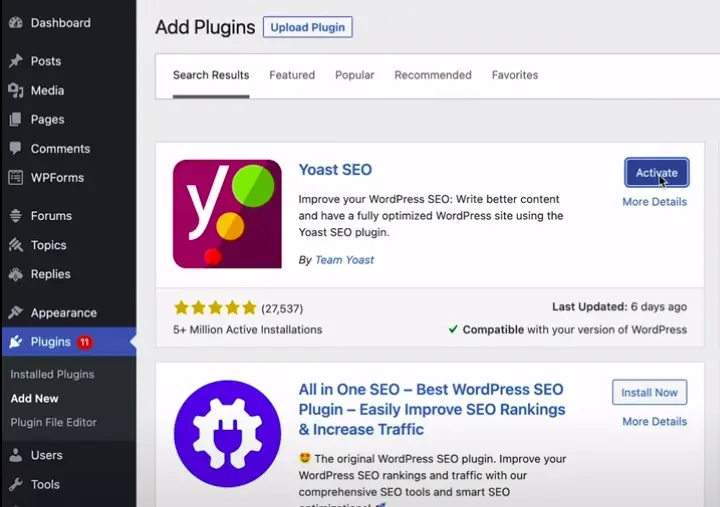

1. Using Plugins: WordPress plugins give you different ways to control and customize your robots.txt file. Examples of plugins include:

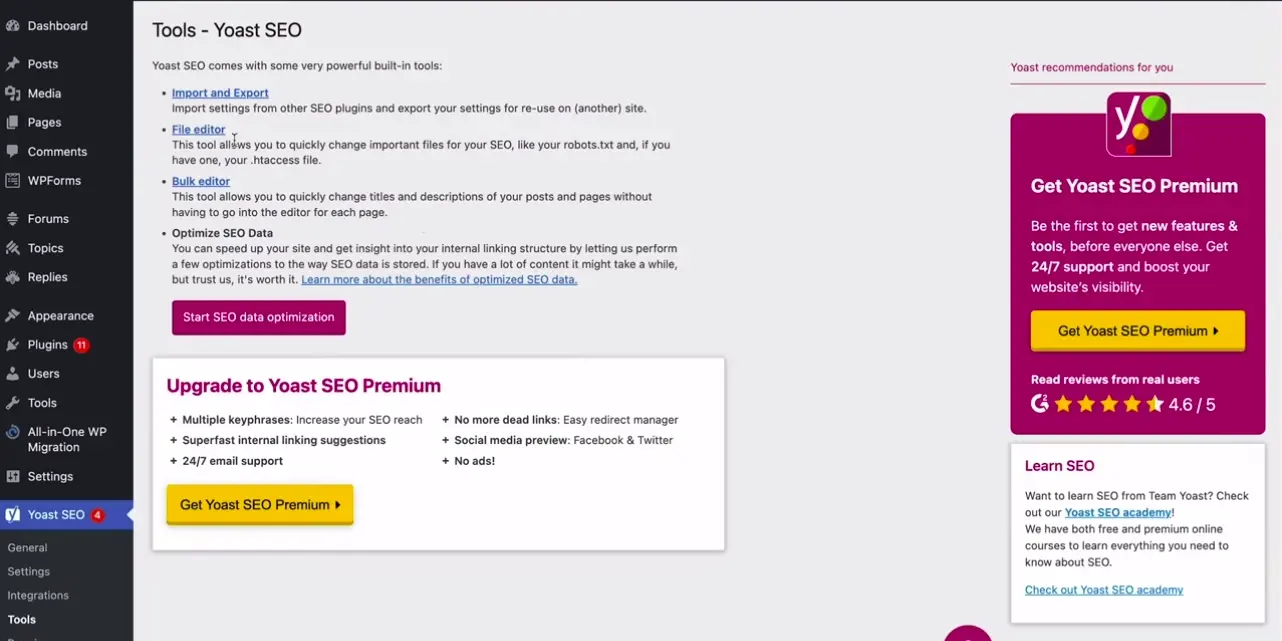

- Using Yoast SEO: Go to Plugins in your WordPress admin and search for Yoast SEO.

- Install and activate the Yoast SEO plugin.

- Click on Yoast, and it’ll display a list of options.

- Click File editor.

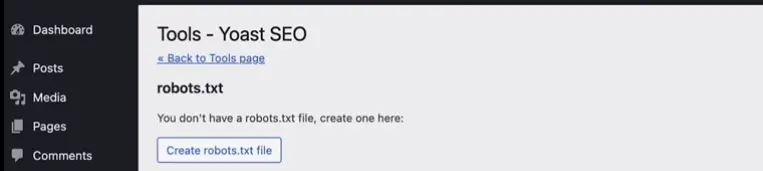

- Click the Create robots.txt file button

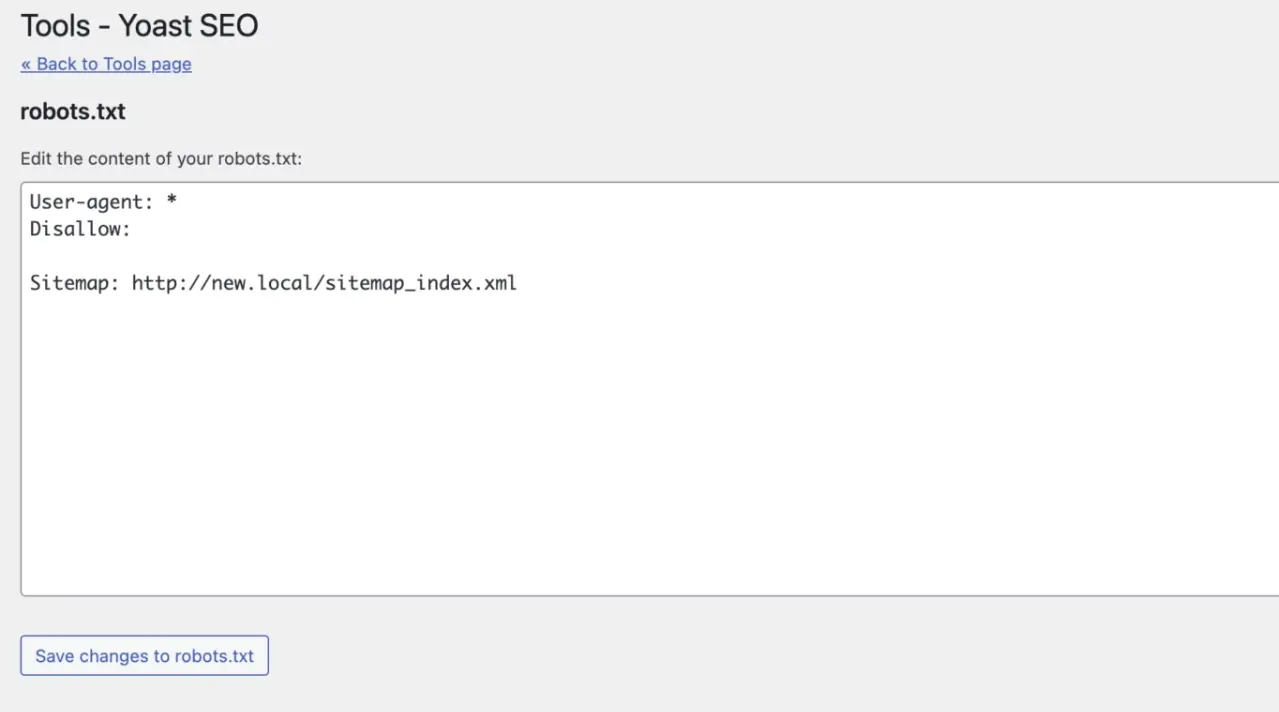

- Edit the file generated by Yoast.

Here we can set the rules to disallow access to our admin folder and allow access to a particular document in the folder.
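WordPress's own default virtual robots.txt uses exactly this pattern: Disallow: /wp-admin/ followed by Allow: /wp-admin/admin-ajax.php. The Allow line wins for that one file because Google, following the Robots Exclusion Protocol (RFC 9309), uses the most specific matching rule, meaning the one with the longest path, and prefers Allow on a tie. Here's a minimal Python sketch of that precedence; the `is_allowed` helper is hypothetical and skips wildcard support.

```python
# Rules from WordPress's default virtual robots.txt, in the order WordPress
# writes them. Order doesn't matter under longest-match precedence.
WORDPRESS_DEFAULT_RULES = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Hypothetical helper: longest-match precedence, no wildcard support."""
    best_len = -1
    allowed = True  # a path that no rule matches may be crawled
    for kind, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # empty rules match nothing; non-prefix rules don't apply
        length = len(rule_path)
        # A longer (more specific) rule wins; on a tie, Allow wins.
        if length > best_len or (length == best_len and kind == "allow"):
            best_len = length
            allowed = kind == "allow"
    return allowed


for path in ["/wp-admin/", "/wp-admin/options.php",
             "/wp-admin/admin-ajax.php", "/sample-page/"]:
    verdict = "allowed" if is_allowed(path, WORDPRESS_DEFAULT_RULES) else "blocked"
    print(f"{path:<28} {verdict}")
```

The dashboard paths come out blocked, while admin-ajax.php (which many themes and plugins call from the front end) and ordinary pages stay crawlable.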

If you write this rule:

User-agent: *

Disallow: /

you’re blocking all bots from crawling your entire site. If you leave the Disallow value empty, without the /, you’re allowing all search engine bots to crawl your entire site.
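The difference between these two rules comes down to a single character. This sketch uses Python's standard `urllib.robotparser` to parse both variants and check the same page on a placeholder example.com URL; `parser_for` is a hypothetical helper that parses text instead of fetching a live file.

```python
from urllib.robotparser import RobotFileParser


def parser_for(robots_txt: str) -> RobotFileParser:
    """Hypothetical helper: build a parser from text instead of a live URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


block_everything = parser_for("User-agent: *\nDisallow: /\n")
allow_everything = parser_for("User-agent: *\nDisallow:\n")

url = "https://example.com/blog/hello-world"
print("Disallow: / ->", block_everything.can_fetch("Googlebot", url))  # False
print("Disallow:   ->", allow_everything.can_fetch("Googlebot", url))  # True
```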

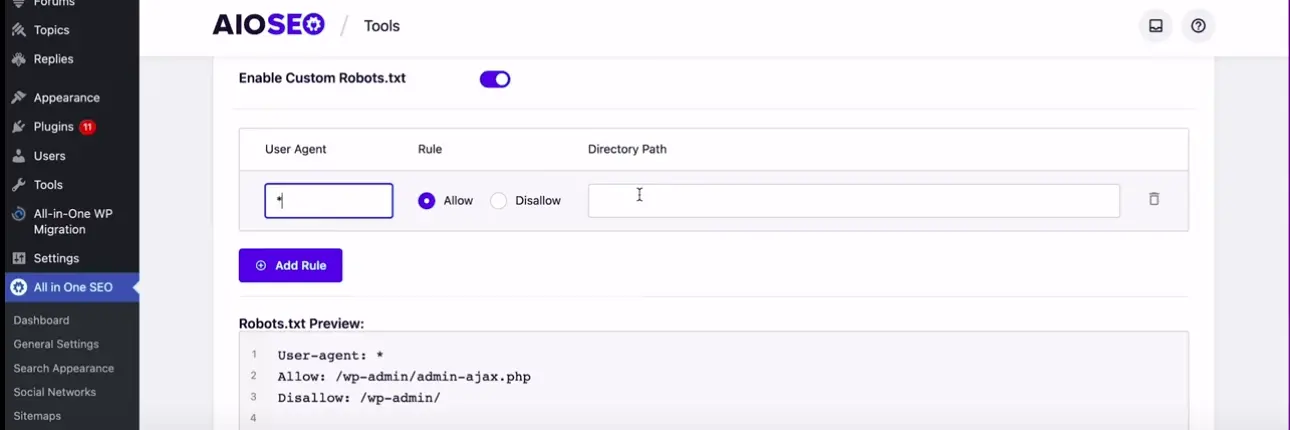

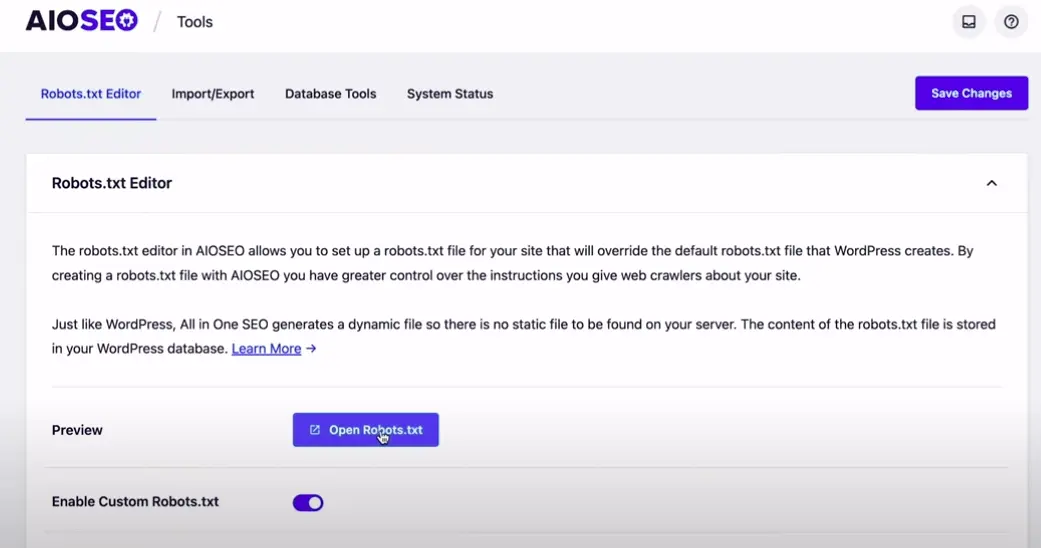

- Using AIOSEO: Search for All in One SEO (AIOSEO) under Plugins, install and activate it, then enable the custom robots.txt option. The AIOSEO interface is user-friendly.

In the image, there are fields for the user agent, the rule, and the directory path, plus an option to add more rules. When you're done adding rules, click the Save Changes button. To view your robots.txt file, click the button to open the robots.txt file.

2. Manual Edit (FTP Client): To edit a robots.txt file manually, you can use FTP (File Transfer Protocol), a network protocol for transferring files between a client and a server over a computer network. First, connect to your website with an FTP client such as FileZilla. On your local computer, create a file named robots.txt (all lowercase, since crawlers request exactly that name) and open it in any text editor to add your custom rules. Then navigate to your site's root folder in the FTP client (often called public_html) and upload robots.txt by dragging it from your local computer into that folder.
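If you'd rather script the upload than drag and drop, Python's standard `ftplib` can do the same job. This is a minimal sketch with hypothetical helper names; the remote folder `public_html`, the host, and the credentials are assumptions to replace with your host's details. It defaults to FTP over TLS (`ftplib.FTP_TLS`) so your password isn't sent in plain text; set `use_tls=False` only if your host supports nothing else. Many hosts offer SFTP instead, which `ftplib` doesn't handle.

```python
import ftplib
from pathlib import Path


def write_robots_file(directory: Path, content: str) -> Path:
    """Hypothetical helper: save robots.txt locally (lowercase name)."""
    path = directory / "robots.txt"
    path.write_text(content, encoding="utf-8")
    return path


def upload_robots_file(host: str, user: str, password: str, local_path: Path,
                       remote_dir: str = "public_html",
                       use_tls: bool = True) -> None:
    """Hypothetical helper: upload robots.txt into the site's root folder."""
    ftp = ftplib.FTP_TLS(host) if use_tls else ftplib.FTP(host)
    with ftp:  # closes the connection even if a step fails
        ftp.login(user, password)  # FTP_TLS secures the control channel here
        if use_tls:
            ftp.prot_p()  # also encrypt the data connection
        ftp.cwd(remote_dir)
        with local_path.open("rb") as fh:
            ftp.storbinary("STOR robots.txt", fh)


# Example (placeholder host and credentials, so not run here):
# local = write_robots_file(Path("."), "User-agent: *\nDisallow: /wp-admin/\n")
# upload_robots_file("ftp.example.com", "username", "password", local)
```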

Summary

The robots.txt file controls which parts of your website web crawlers can access. If you don't need to keep search engine bots out of any part of your site, you don't have to worry about it. Whenever your content or site structure changes, test your robots.txt file to make sure the right pages are still targeted. If your site changes regularly, you can also monitor it with Google Search Console.
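Before uploading a changed file, you can run a quick local check like the one below. It's a sketch, assuming a hypothetical list of paths that must stay crawlable and paths that must stay blocked on a placeholder domain, and it uses Python's standard `urllib.robotparser` (prefix matching only, no wildcards). Google Search Console remains the place to confirm how Google itself reads your live file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths that must stay crawlable / stay blocked on this site.
MUST_ALLOW = ["/", "/blog/", "/products/red-sneakers"]
MUST_BLOCK = ["/wp-admin/", "/search/shoes"]


def check_robots(robots_txt: str, agent: str = "Googlebot",
                 base: str = "https://example.com") -> list[str]:
    """Hypothetical helper: return problems; an empty list means it passes."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for path in MUST_ALLOW:
        if not parser.can_fetch(agent, base + path):
            problems.append(f"{path} is blocked but should be crawlable")
    for path in MUST_BLOCK:
        if parser.can_fetch(agent, base + path):
            problems.append(f"{path} is crawlable but should be blocked")
    return problems


good = "User-agent: *\nDisallow: /wp-admin/\nDisallow: /search/\n"
bad = "User-agent: *\nDisallow: /\n"  # e.g. a rule left over from a staging site
print(check_robots(good))  # []
print(check_robots(bad))
```

The second call reports all three must-allow paths as blocked, which is exactly the kind of mistake worth catching before it reaches production.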

Frequently Asked Questions

What are robots.txt files and how do they relate to web crawlers?

"Robots.txt" files are used to communicate with web crawlers, providing guidelines on what content can be crawled and how web crawlers should behave on a website.

How do web crawlers contribute to search engine results?

Web crawlers index web pages by gathering data from websites and creating searchable indexes. When users perform search queries, search engines use these indexes to retrieve relevant web pages and present them in search results. Web crawlers play a critical role in keeping the search engine's index up-to-date and comprehensive.

What are the implications of shared hosting on SEO and website rankings?

Slow loading speeds and frequent downtimes on shared hosting can negatively impact user experience and potentially affect SEO rankings.

How can web hosting affect SEO?

If you choose a poor web hosting company, your site will load more slowly for visitors. Besides giving visitors a bad experience, slow sites tend to rank lower in search results, because search engines take page speed into account.

Jessica Agorye is a developer based in Lagos, Nigeria. A witty creative with a love for life, she is dedicated to sharing insights and inspiring others through her writing. With over 5 years of writing experience, she believes that content is king.
