In machine learning, a feature is any measurable property of data that helps a model make predictions.

Think of it as the building block of a model — what it learns from to recognize patterns and make decisions. The quality and relevance of features directly impact how well a model performs. The right features can boost accuracy and efficiency, while poorly chosen ones can lead to weak predictions.

In this article, we’ll break down what features are, the different types you’ll encounter, and why they matter. We’ll also dive into feature engineering, selection techniques, and the common challenges data scientists face when working with features.

How Features Shape Machine Learning Models

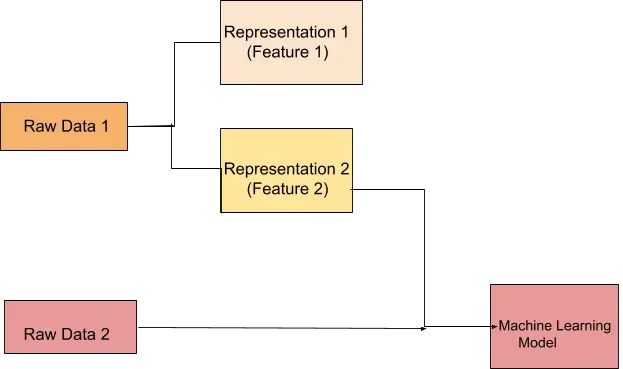

Features are the foundation of machine learning models. They determine what the model learns and how well it performs. The type of features used depends on the nature of the data.

In text-based models, features capture word patterns and structures. A spam detection system, for example, might track how often words like "discount" or "offer" appear in an email. Sentiment analysis models may use a sentiment score, assigning a number to indicate whether a text is positive, neutral, or negative. More advanced techniques like TF-IDF (Term Frequency-Inverse Document Frequency) and N-grams help identify important words within a document.
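The text features described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the three messages are invented, the tokenizer only splits on whitespace, and the TF-IDF formula is the simple unsmoothed variant (real libraries usually add smoothing and normalization).

```python
import math
from collections import Counter

# Hypothetical mini-corpus; the messages are invented for illustration.
emails = [
    "limited time offer get your discount now",
    "meeting notes attached for your review",
    "exclusive discount offer just for you",
]

def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()

def keyword_counts(text, keywords=("discount", "offer")):
    """Count how often each keyword appears in one message."""
    counts = Counter(tokenize(text))
    return {word: counts[word] for word in keywords}

def ngrams(tokens, n=2):
    """Return consecutive n-word sequences (bigrams by default)."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def tf_idf(term, doc_tokens, corpus_tokens):
    """Term frequency times inverse document frequency (unsmoothed)."""
    tf = doc_tokens.count(term) / len(doc_tokens)
    docs_with_term = sum(1 for tokens in corpus_tokens if term in tokens)
    if docs_with_term == 0:
        return 0.0
    return tf * math.log(len(corpus_tokens) / docs_with_term)

corpus_tokens = [tokenize(e) for e in emails]
spam_features = [keyword_counts(e) for e in emails]
```

In this toy corpus, a word that appears in only one message (such as "meeting") receives a higher TF-IDF weight than a word shared by several messages (such as "your"), which is the behavior that makes TF-IDF useful for highlighting distinctive terms.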

For image data, features take an entirely different form. Instead of words, models analyze pixel intensity, edges, textures, and color distributions. A facial recognition system detects unique facial structures, while deep learning models automatically extract complex patterns using convolutional neural networks (CNNs).

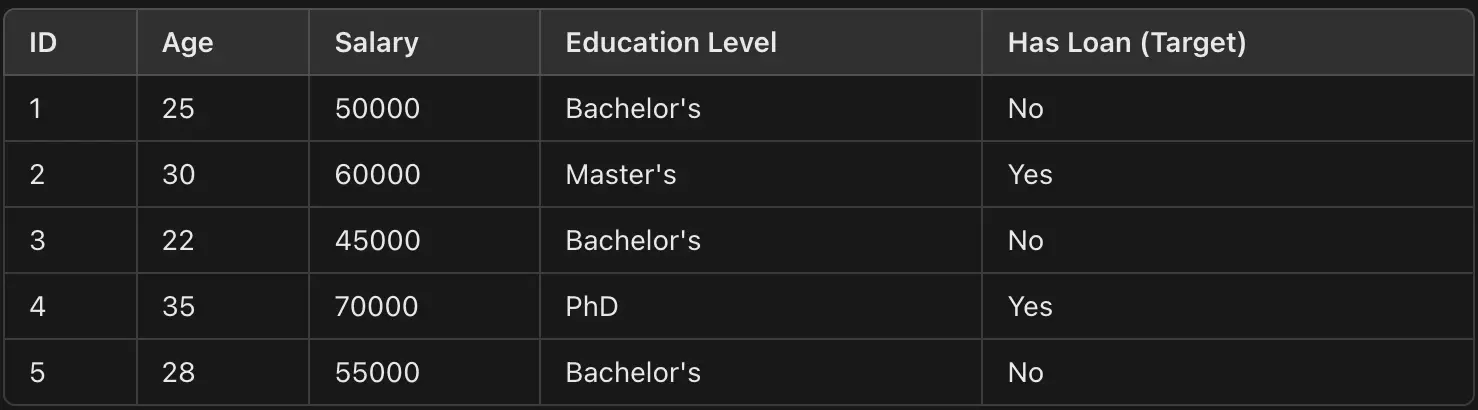

When working with structured tabular data such as spreadsheets, features are usually numerical or categorical variables. In a house price prediction model, for example, key features might include square footage, number of bedrooms, and location. Some numerical values, like income, may require transformations (e.g., log scaling) to improve model performance. Meanwhile, categorical features, such as job title or city, often need encoding so that machine learning algorithms can process them properly.

Here is an example of a tabular dataset with labeled features and a target variable:
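As a minimal sketch, a dataset of this kind can be written as a list of records in Python. The column names and every value below are invented for illustration; the point is only to show which columns act as features and which one is the target.

```python
# Hypothetical house-price records; all column names and values are made up.
houses = [
    {"square_feet": 1400, "bedrooms": 3, "location": "suburb", "price": 240000},
    {"square_feet": 2100, "bedrooms": 4, "location": "city", "price": 410000},
    {"square_feet": 900, "bedrooms": 2, "location": "rural", "price": 130000},
]

FEATURE_COLUMNS = ["square_feet", "bedrooms", "location"]  # model inputs
TARGET_COLUMN = "price"  # the value the model should predict

# Split each record into a feature row (X) and a target value (y).
X = [[row[col] for col in FEATURE_COLUMNS] for row in houses]
y = [row[TARGET_COLUMN] for row in houses]
```

Here `square_feet` and `bedrooms` are numerical features, `location` is a categorical feature that would still need encoding, and `price` is the target.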

Why Choosing the Right Features Matters

Not all features contribute equally to a model's performance. Poorly chosen features can lead to:

Overfitting: When the model memorizes patterns that don’t generalize well to new data.

Underfitting: When the model lacks important patterns, leading to poor predictions.

Computational Inefficiency: Unnecessary features increase processing time, especially in deep learning models.

Consider a spam detection model. Including the email sender's name as a feature is unlikely to help, because the name by itself says little about whether a message is spam. However, analyzing the presence of certain phrases such as "free trial" or "limited time offer" provides a much stronger signal for classification.

Features as the Model’s Input Variables

At its core, a machine learning model learns from features to make predictions. Selecting and engineering the right features is critical to success in every problem.

For instance, in a house price prediction model, features like square footage, number of bedrooms, and neighborhood directly impact the predicted price. In a fraud detection system, analyzing transaction amount, location, and user behavior history helps flag suspicious activities.

By carefully selecting, engineering, and refining features, data scientists can dramatically improve model accuracy, efficiency, and overall performance.

Types of Features in Machine Learning

Features in machine learning come in different forms depending on the type of data they represent. Understanding these distinctions is crucial because each type requires different preprocessing and transformation techniques to ensure the model learns effectively.

Numerical Features

Numerical features represent measurable quantities and can be either continuous or discrete.

Continuous numerical features can take any real value within a range. Think of measurements like height, temperature, weight, or salary, which can have decimal values.

Discrete numerical features are countable and take distinct values. Examples include the number of children in a family, rooms in a house, or purchases made, all of which are whole numbers that can’t be split into fractions.

Categorical Features

Categorical features represent distinct groups or labels and come in two main types:

Nominal features have no inherent order. Examples include gender (male, female), car brands (Toyota, Ford, BMW), or countries (USA, Canada, Germany). These categories are different but are not ranked in any way.

Ordinal features, on the other hand, have a meaningful order but no fixed interval between values. A good example is education level (high school < bachelor’s < master’s) or customer satisfaction (low < medium < high). There’s a clear ranking, but the difference between levels isn’t necessarily equal.

Boolean Features

Boolean features are the simplest type. They have only two possible values, typically represented as True/False or 1/0. These are useful when working with binary decisions, such as:

Did a customer make a purchase? (Yes/No → 1/0)

Was a loan application approved? (True/False → 1/0)

Is an email classified as spam? (Spam/Not Spam → 1/0)

Derived (Engineered) Features

Sometimes, the most useful features don’t exist in the raw data and need to be created. Feature engineering involves transforming existing features or combining them to create new, more informative ones. For example:

BMI (Body Mass Index) = weight (kg) / height² (m²)

Age group, derived from the date of birth (e.g., "18-25", "26-35").

Price per square foot, calculated by dividing total price by area, is useful in real estate models.
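The three derived features above could be computed roughly as follows. This is a sketch under stated assumptions: the function names are hypothetical, and the age-group boundaries beyond "18-25" and "26-35" are made up to keep the example complete.

```python
from datetime import date

def bmi(weight_kg, height_m):
    """Body Mass Index: weight in kilograms divided by height in meters squared."""
    return weight_kg / height_m ** 2

def age_group(date_of_birth, today):
    """Bucket an age into a labeled range (bucket edges are assumptions)."""
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (date_of_birth.month, date_of_birth.day)
    age = today.year - date_of_birth.year - (0 if had_birthday else 1)
    if age < 18:
        return "under 18"
    if age <= 25:
        return "18-25"
    if age <= 35:
        return "26-35"
    return "36+"

def price_per_square_foot(price, area_sq_ft):
    """Total price divided by area."""
    return price / area_sq_ft

example_bmi = bmi(70, 1.75)
example_group = age_group(date(2000, 6, 15), date(2025, 2, 17))
example_ppsf = price_per_square_foot(300000, 1500)
```

Passing `today` explicitly, rather than calling `date.today()` inside the function, keeps the feature reproducible when the same data is processed on different days.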

Temporal Features

Time-related features are critical for models that analyze trends, seasonality, or time-series data. These features can help capture important patterns in data that change over time. Common examples include:

Timestamps, such as "2025-02-17 10:30:00", are useful for event tracking.

Day of the week, which can help identify patterns (e.g., sales might be higher on weekends).

Month or season, relevant for seasonal trends (e.g., winter clothing sales peak in December).

Elapsed time, such as the number of days since a customer’s last purchase, can help predict customer behavior.
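The temporal features listed above can be pulled out of a raw timestamp with Python's standard `datetime` module. The sketch below is illustrative only: the function name, the returned field names, and the second timestamp (the customer's last purchase) are assumptions.

```python
from datetime import datetime

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]
TIMESTAMP_FORMAT = "%Y-%m-%d %H:%M:%S"

def temporal_features(timestamp, last_purchase):
    """Derive calendar and elapsed-time features from two timestamp strings."""
    ts = datetime.strptime(timestamp, TIMESTAMP_FORMAT)
    last = datetime.strptime(last_purchase, TIMESTAMP_FORMAT)
    return {
        "day_of_week": DAY_NAMES[ts.weekday()],  # weekday() is 0 for Monday
        "is_weekend": ts.weekday() >= 5,
        "month": ts.month,
        "hour": ts.hour,
        "days_since_last_purchase": (ts - last).days,  # whole days only
    }

# The second timestamp is a made-up "last purchase" time.
features = temporal_features("2025-02-17 10:30:00", "2025-01-28 09:00:00")
```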

Common Feature Engineering Techniques

Feature engineering involves various techniques to extract, modify, and optimize features for better model performance.

Feature Transformation

Sometimes, raw features need to be adjusted to better represent patterns in the data. Transformation techniques help scale, reshape, or create new meaningful representations:

Normalization (Min-Max Scaling): Rescales values between 0 and 1, ensuring they stay within a fixed range. Useful when features have different units or scales.

Standardization (Z-score Scaling): Rescales data to a mean of 0 and a standard deviation of 1. This helps models that are sensitive to feature scale, such as linear models, SVMs, and neural networks.

Log Transformation: Applies a logarithmic scale to deal with skewed distributions, helping stabilize variance and improving linear relationships.

Polynomial Features: Generates new features by combining existing ones, such as adding x² or x³ terms to capture non-linear patterns.
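The four transformations above are short enough to write by hand, which can make the formulas easier to see. The sketch below uses only the standard library; the function names and the income values are illustrative assumptions, and in practice a library such as scikit-learn would usually handle this.

```python
import math
import statistics

def min_max_scale(values):
    """Rescale to [0, 1]. Assumes the values are not all identical."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Z-score scaling: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]

def log_transform(values):
    """log(1 + v), which is defined at zero and compresses large values."""
    return [math.log1p(v) for v in values]

def polynomial_features(x, degree=3):
    """Return [x, x^2, ..., x^degree] for a single numeric value."""
    return [x ** d for d in range(1, degree + 1)]

incomes = [20000, 35000, 50000, 120000]  # made-up, right-skewed values
scaled = min_max_scale(incomes)
z_scores = standardize(incomes)
logged = log_transform(incomes)
```

One design point worth noting: the minimum, maximum, mean, and standard deviation should be computed on the training data only and then reused for new data, otherwise information from the test set leaks into the model.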

Feature Encoding (For Categorical Data)

Most machine learning algorithms can’t directly process categorical data, so encoding is usually necessary to convert it into numerical form:

One-Hot Encoding: Converts categories into separate binary columns. For example, "Red" and "Blue" become [1,0] and [0,1], respectively.

Label Encoding: Assigns integer values to categories, such as "Low" → 0, "Medium" → 1, "High" → 2.

Ordinal Encoding: Similar to label encoding but maintains order. It is useful for features like education levels or customer satisfaction scores.
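To make the difference between these encodings concrete, here is a minimal hand-written sketch. The function names are hypothetical, and the label encoder assigns integers in alphabetical order, which is one common convention but still an assumption here.

```python
def one_hot_encode(values, categories):
    """One binary column per category; exactly one 1 per row."""
    return [[1 if v == c else 0 for c in categories] for v in values]

def label_encode(values):
    """Assign integers by alphabetical order; the integers carry no ranking."""
    mapping = {c: i for i, c in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping

def ordinal_encode(values, ordered_categories):
    """Assign integers that follow an explicitly supplied ranking."""
    mapping = {c: i for i, c in enumerate(ordered_categories)}
    return [mapping[v] for v in values]

colors = one_hot_encode(["Red", "Blue"], ["Red", "Blue"])

levels = ["Low", "High", "Medium"]
labels, label_mapping = label_encode(levels)
ranks = ordinal_encode(levels, ["Low", "Medium", "High"])
```

With alphabetical label encoding, "High" becomes 0 and "Low" becomes 1, so the integers no longer reflect the real ranking. Supplying the order explicitly, as `ordinal_encode` does, avoids that problem for ordinal features.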

Feature Extraction

Feature extraction helps reduce dimensionality or transform raw data into a more useful format:

Principal Component Analysis (PCA): Identifies key features (principal components) and reduces dimensionality while preserving important information.

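Word Embeddings (NLP Models): Converts text into dense numerical vectors using methods like Word2Vec or BERT embeddings to capture meaning. TF-IDF also turns text into vectors, but it produces sparse term weights rather than learned embeddings.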

Edge Detection (Image Processing): Extracts key visual features by detecting edges, patterns, and textures in images.
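Of the techniques above, edge detection is the easiest to show without extra libraries. The sketch below is a crude finite-difference stand-in for real operators such as Sobel or Canny: it only compares each pixel with its left neighbor, and the function name, threshold, and tiny grayscale image are all assumptions.

```python
def detect_vertical_edges(image, threshold=100):
    """Mark pixels whose intensity differs sharply from the left neighbor.

    `image` is a list of rows of grayscale intensities (0-255).
    Returns a same-sized grid of 0/1 flags.
    """
    edge_map = []
    for row in image:
        edge_row = [0]  # the first column has no left neighbor
        for left, right in zip(row, row[1:]):
            edge_row.append(1 if abs(right - left) >= threshold else 0)
        edge_map.append(edge_row)
    return edge_map

# A made-up 3x4 image: dark on the left, bright on the right.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
edge_map = detect_vertical_edges(image)
```

The resulting grid flags only the column where dark meets bright, turning twelve raw pixel values into a much more compact description of where the boundary lies.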

Feature Selection

Not all features contribute equally; some add noise or redundancy. Feature selection techniques help choose the most important ones:

Variance Threshold: Removes features with low variance, as they contribute little to the model.

Correlation Analysis: Eliminates highly correlated features to avoid redundancy and multicollinearity.

Recursive Feature Elimination (RFE): Iteratively removes the least important features to improve performance.

LASSO Regularization: Shrinks less important feature weights to zero, effectively removing them from the model.
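The first two techniques above, variance threshold and correlation analysis, can be sketched with the standard library alone. The column names, values, and the 0.95 correlation limit below are illustrative assumptions; RFE and LASSO need a trained model and are not shown.

```python
import math
import statistics

def variance_threshold(columns, threshold=0.0):
    """Keep only columns whose population variance exceeds the threshold."""
    return {name: vals for name, vals in columns.items()
            if statistics.pvariance(vals) > threshold}

def pearson(xs, ys):
    """Pearson correlation. Assumes neither column is constant."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def drop_correlated(columns, limit=0.95):
    """Keep a column only if it is not highly correlated with one already kept."""
    kept = {}
    for name, vals in columns.items():
        if all(abs(pearson(vals, other)) < limit for other in kept.values()):
            kept[name] = vals
    return kept

# Made-up columns: one constant, and two that measure the same thing.
columns = {
    "square_feet": [1000, 1500, 2000, 2500],
    "square_meters": [92.9, 139.4, 185.8, 232.3],
    "bedrooms": [2, 4, 3, 4],
    "has_roof": [1, 1, 1, 1],
}
selected = drop_correlated(variance_threshold(columns))
```

Running the variance filter first matters here, because a constant column has zero standard deviation and would cause a division by zero in the correlation step.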

Role of Features in Different Machine Learning Models

The way features are used varies depending on the type of machine learning model. Some models require careful feature selection and engineering, while others can automatically extract relevant features from raw data. Let’s break it down by learning type.

Supervised Learning

Supervised learning models learn from labeled data, meaning they use features to establish a relationship between input variables and the target outcome. These models are broadly categorized into regression and classification tasks.

Regression Models (e.g., Linear Regression, Decision Trees): These models predict continuous values based on numerical and categorical features. For example, a house price prediction model might use features like square footage, number of bedrooms, and location to estimate a home’s price.

Classification Models (e.g., Logistic Regression, SVM, Random Forests): These models classify data into categories. Well-engineered features are essential to help the model distinguish between different classes. For instance, in a spam detection system, features like word frequency, sender reputation, and message length help classify emails as spam or not spam.
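As a minimal sketch of the regression case, the example below fits a straight line to a single feature using the closed-form least-squares solution. The data is invented and deliberately perfectly linear, and the function names are assumptions; a real house price model would use many features and a library implementation.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(x, slope, intercept):
    """Apply the fitted line to a new feature value."""
    return slope * x + intercept

# Made-up training data: the feature is square footage, the target is price.
square_feet = [1000, 1500, 2000, 2500]
prices = [200000, 250000, 300000, 350000]

slope, intercept = fit_line(square_feet, prices)
estimate = predict(1800, slope, intercept)
```

The fitted slope is the learned relationship between the feature and the target: in this toy data, each additional square foot adds a fixed amount to the predicted price.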

Unsupervised Learning

Unsupervised learning models don’t rely on labeled data. Instead, they identify hidden patterns and structures in the data by analyzing feature similarities.

Clustering (e.g., K-Means, Hierarchical Clustering): These models use features to group similar data points together. In customer segmentation, for example, features like purchase history, browsing behavior, and location help group customers with similar buying habits.

Dimensionality Reduction (e.g., PCA, t-SNE): When dealing with high-dimensional data, these techniques extract the most informative features while discarding redundant ones. For example, Principal Component Analysis (PCA) is used in image compression to reduce pixel data while retaining essential visual features.
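The clustering idea above can be illustrated with a bare-bones K-Means loop. This is a sketch under stated assumptions: the customer data is invented, the starting centroids are picked by hand instead of randomly, and the loop runs a fixed number of iterations rather than checking for convergence.

```python
def kmeans(points, centroids, iterations=10):
    """Plain K-Means: assign each point to its nearest centroid, then re-average."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            # Squared Euclidean distance to every centroid.
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Made-up customers: (purchases per month, average order value).
customers = [(1, 20), (2, 25), (1, 22), (10, 200), (12, 220), (11, 210)]
centroids, clusters = kmeans(customers, centroids=[customers[0], customers[3]])
```

Because K-Means relies on distances between feature values, features on larger scales (here, order value) dominate the grouping unless the data is normalized or standardized first.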

Deep Learning Models

Deep learning models, such as neural networks, handle feature extraction differently. Instead of relying on manually selected features, they learn hierarchical representations directly from raw data.

Neural Networks (e.g., CNNs, RNNs, Transformers): These models automatically extract high-level features. A Convolutional Neural Network (CNN), for instance, detects edges, shapes, and textures in images, while a Recurrent Neural Network (RNN) processes sequential features in text, such as sentence structures.

Feature Embeddings (e.g., Word2Vec, BERT): Some deep learning models convert categorical and textual data into dense numerical representations. Word2Vec and BERT embeddings, for example, transform words into vector space representations, allowing NLP models to understand word relationships more effectively.

What’s Next?

That’s a wrap on features in machine learning! The quality of your features directly impacts your model’s performance. Well-engineered features can make even simple models powerful, while poor ones can lead to overfitting and inefficiency.

Moving forward, focus on practicing feature engineering and selection techniques to refine your models. Experiment with different transformations, encoding methods, and selection strategies to see what works best for your data. The more hands-on experience you gain, the better your models will become.

Keep exploring, keep iterating, and let your features do the heavy lifting!

Frequently Asked Questions

In what ways are machine learning solutions applied in financial services?

Machine learning solutions in financial services use advanced techniques to perform complex tasks such as predictive maintenance, risk assessment, and fraud detection. By analyzing large volumes of data with statistical techniques, these models identify underlying patterns that help financial institutions make informed decisions. This not only enhances customer support but also improves overall efficiency and security in the financial sector.

How does Machine Learning assist in inventory management for a dropshipping business?

Machine learning assists in inventory management by analyzing sales data, seasonal trends, and market changes to predict inventory needs, which helps maintain optimal stock levels and reduces the risk of overstocking or stockouts.

How can predictive analysis and sentiment analysis benefit from Azure Machine Learning?

Azure Machine Learning accelerates predictive and sentiment analysis projects by offering pre-built models, attached compute resources for training, and tools for hyperparameter tuning to improve accuracy, all as a free extension to developers' preferred machine learning tools.

What is the role of artificial intelligence and machine learning in ethical hacking?

AI and machine learning enhance ethical hacking by automating tasks, identifying patterns, and improving threat detection. Ethical hackers leverage these technologies to analyze large datasets and enhance security measures.

Joel Olawanle is a Software Engineer and Technical Writer with over three years of experience helping companies communicate their products effectively through technical articles.
