In recent years, machine learning has experienced remarkable advancements, and transfer learning is one of the most influential techniques contributing to this progress.

In this article, you will learn what transfer learning is, why it matters, how it works, where it is applied, and the challenges that come with it.

What is Transfer Learning?

To understand transfer learning, it helps to first grasp a key concept called a "domain."

A domain represents the properties of a dataset, including its possible input features and the probability distribution of that data. The input features can be pixel values in images or words in text. For example, a domain could be colored photos of animals, where the feature space is the set of RGB pixel values and the distribution describes how often particular kinds of images occur, such as how often animals are photographed from a given angle. Two domains differ if their feature space or their probability distribution differs.
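In symbols, the definition above can be written compactly. The notation here is a common convention and an assumption on our part, not something this article defines elsewhere: $\mathcal{X}$ stands for the feature space, $P(X)$ for the probability distribution over inputs, and the subscripts $S$ and $T$ mark the source and target domains.

$$
\mathcal{D} = \{\mathcal{X},\, P(X)\}, \qquad \mathcal{D}_S \neq \mathcal{D}_T \iff \mathcal{X}_S \neq \mathcal{X}_T \ \text{ or } \ P_S(X) \neq P_T(X)
$$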

Transfer learning involves leveraging foundational knowledge from one or more source domains to boost learning performance in a related or different target domain.

In transfer learning, the target domain does not have to be similar to the source domain on which the pretrained model was trained. In practice, however, transferring from a similar or related domain is more common and tends to work better.

Importance of Transfer Learning in Modern Machine Learning

With advancements in machine learning, transfer learning has emerged as a critical technique for addressing various challenges in traditional machine learning. Its main benefits include:

Eliminates Redundant Models: Traditional ML models are built for single tasks, leading to inefficiency when similar tasks require separate models. Transfer learning solves this by enabling a single pretrained model to adapt to multiple tasks. For example, BERT, pretrained on general text, can be fine-tuned for legal document analysis or summarization.

Works with Limited Data: Training a high-performing model requires large, high-quality datasets, which are costly and time-consuming to collect. Transfer learning mitigates this by using knowledge from a pretrained model. For instance, a model trained on natural images (e.g., ImageNet) can be adapted to medical imaging tasks like tumor detection, even with limited labeled data.

Provides a Strong Starting Point: Pretrained models learn general features from vast datasets, making them effective in new domains with minimal training. A model trained on Wikipedia, for example, learns contextual meanings and grammatical structures, which lets it handle tasks like sentiment analysis with little or, in some cases, no fine-tuning.

Speeds Up Training: Training a model from scratch for a specific task is resource-intensive. Transfer learning significantly reduces training time by starting from a model that has already learned useful patterns, ensuring faster convergence and lower computational costs.

How Transfer Learning Works

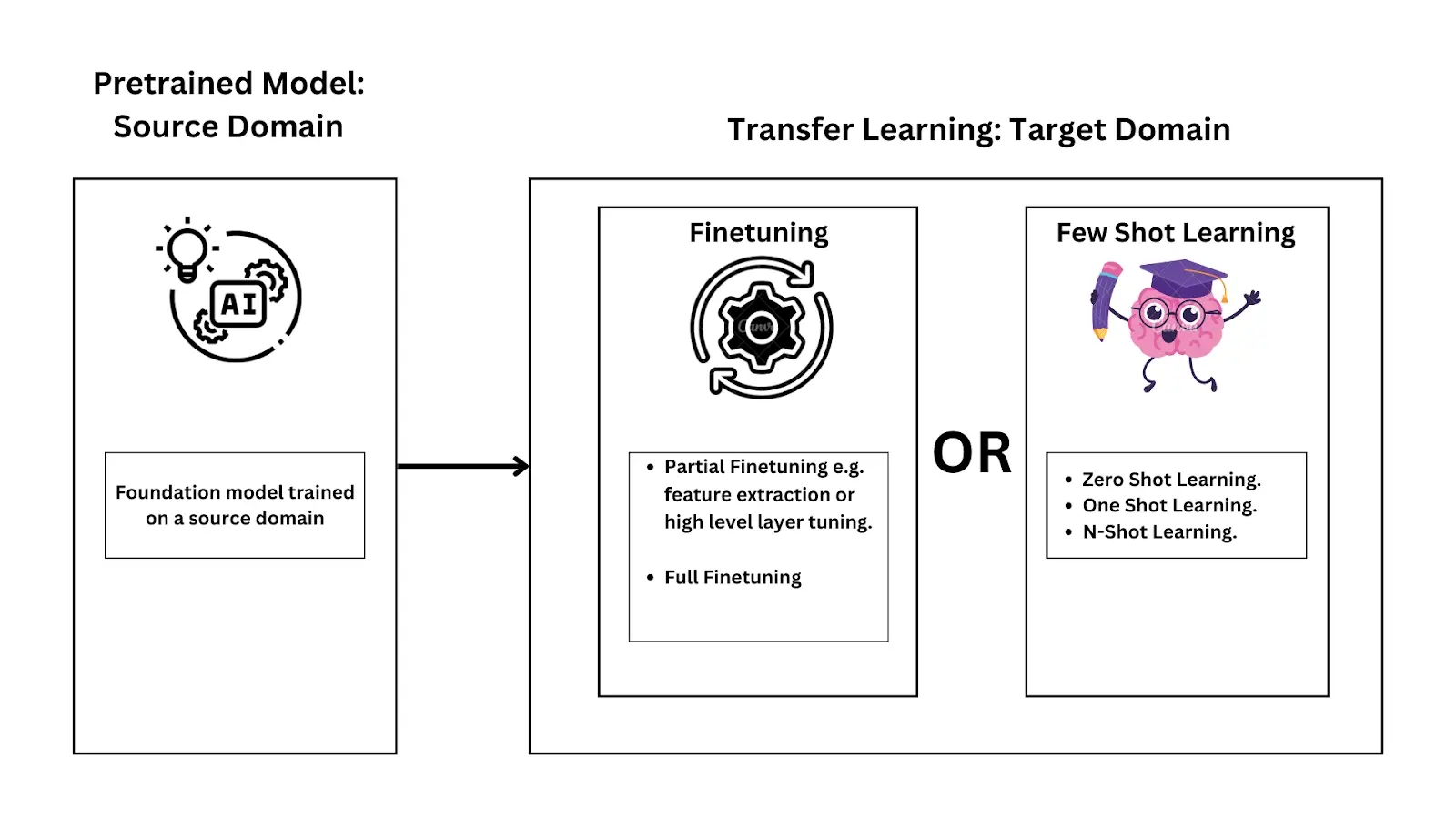

Transfer learning allows a model to apply knowledge from a previously learned task to a new, related one. Instead of training a model from scratch, we use a pretrained model — a neural network that has already learned useful patterns from a large dataset.

This significantly reduces the amount of data and training time required for the new task.

But how does transfer learning actually work? Let’s break it down by understanding pretrained models, fine-tuning, and few-shot learning—the key building blocks of this approach.

Pretrained Models

A pretrained model is a neural network trained on a large dataset, such as ImageNet (for image classification) or Wikipedia (for text processing). These models learn general patterns, like edges and shapes in images or grammar and sentence structures in text, which can then be transferred to new tasks.

Some pretrained models are trained on domain-specific datasets, making them particularly useful for specialized applications. For example, a language model trained on medical research papers can be fine-tuned for healthcare-related tasks, such as diagnosing diseases from patient records.

Once we have a pretrained model, there are different ways to adapt it to a new task. Two of the most common approaches are fine-tuning and few-shot learning.

Fine-Tuning: Adapting a Pretrained Model

Fine-tuning is a technique in which a pre-trained model is adjusted to perform better on a specific task by modifying its weights. This allows the model to leverage its existing knowledge while adapting to the new domain.

There are two main types of fine-tuning:

Full fine-tuning: All layers of the model are updated, allowing it to fully adapt to the new task. This approach is more computationally expensive but can achieve high accuracy.

Partial fine-tuning: Some layers are "frozen" (kept unchanged), while only certain layers — usually the later ones — are fine-tuned. This is more efficient and is commonly used when the source and target tasks are similar.

For example, in an image classification task, a model trained on ImageNet might keep its early layers (which detect basic shapes and textures) while only updating its final layers to classify new categories.
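To make partial fine-tuning concrete, here is a minimal, dependency-free sketch. It is a toy illustration under stated assumptions, not how any particular framework implements it: `PRETRAINED_W` is a made-up stand-in for a pretrained early layer, the "model" is a single frozen layer plus a logistic head, and the dataset is four hand-written points.

```python
import math

# Hypothetical "pretrained" weights for the early layer. They stay frozen throughout.
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

def frozen_features(x, frozen_w):
    """Early layer: a fixed linear map followed by ReLU. Never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in frozen_w]

def predict(x, frozen_w, head_w, head_b):
    """Final layer: logistic regression on top of the frozen features."""
    h = frozen_features(x, frozen_w)
    z = sum(w * hi for w, hi in zip(head_w, h)) + head_b
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(data, frozen_w, head_w, head_b):
    """Mean binary cross-entropy over the dataset."""
    eps = 1e-12
    losses = []
    for x, y in data:
        p = predict(x, frozen_w, head_w, head_b)
        losses.append(-(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)))
    return sum(losses) / len(losses)

def fine_tune_head(data, frozen_w, head_w, head_b, lr=0.1, epochs=200):
    """Update only the head with gradient descent; frozen_w is read but never written."""
    head_w = list(head_w)
    for _ in range(epochs):
        for x, y in data:
            h = frozen_features(x, frozen_w)
            err = predict(x, frozen_w, head_w, head_b) - y  # gradient of the loss w.r.t. z
            head_w = [w - lr * err * hi for w, hi in zip(head_w, h)]
            head_b -= lr * err
    return head_w, head_b

# Toy target task: label is 1 when the first coordinate is positive.
data = [((1.0, 0.5), 1), ((2.0, -1.0), 1), ((-1.0, 0.3), 0), ((-2.0, -0.7), 0)]

head_w, head_b = [0.0] * 4, 0.0  # freshly initialized task-specific head
loss_before = log_loss(data, PRETRAINED_W, head_w, head_b)
new_head_w, new_head_b = fine_tune_head(data, PRETRAINED_W, head_w, head_b)
loss_after = log_loss(data, PRETRAINED_W, new_head_w, new_head_b)

print("loss before:", loss_before)
print("loss after: ", loss_after)
```

Full fine-tuning would differ only in that the early-layer weights would receive gradient updates too, which is where the extra computational cost comes from.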

Few-Shot Learning: Learning from Minimal Data

Few-shot learning allows a model to generalize to a new task with very little training data. Instead of retraining the model, a few labeled examples are provided during inference to guide its predictions. This technique has gained popularity with large language models (LLMs), such as GPT-based models, which can perform tasks with minimal examples.

Few-shot learning comes in three variations:

Zero-shot learning: The model performs a task without seeing any labeled examples. For example, a sentiment analysis model classifies a movie review as positive or negative without any prior examples.

One-shot learning: The model is given just one example to learn from. For example, recognizing a new person's face after seeing only a single labeled image.

N-shot learning: The model is given a few examples (N > 1). For example, a chatbot improves its responses after seeing 5–10 example interactions.

Few-shot learning is particularly useful in natural language processing (NLP), where models can be prompted with a few examples instead of being fully retrained for each task.
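As a rough illustration of how examples are supplied at inference time, the sketch below assembles zero-shot, one-shot, and N-shot prompts for a sentiment task. The `build_prompt` helper, the "Review:/Sentiment:" layout, and the sample reviews are all hypothetical; a real application would send the resulting string to whichever language model it uses.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt: task description, labeled examples (possibly none), then the query."""
    parts = [task]
    for text, label in examples:
        parts.append(f"Review: {text}\nSentiment: {label}")
    # The final block leaves the label empty for the model to complete.
    parts.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(parts)

task = "Classify each movie review as positive or negative."
examples = [
    ("A moving, beautifully shot film.", "positive"),
    ("Two hours I will never get back.", "negative"),
]
query = "The plot dragged, but the acting was superb."

zero_shot = build_prompt(task, [], query)            # no labeled examples
one_shot = build_prompt(task, examples[:1], query)   # exactly one labeled example
n_shot = build_prompt(task, examples, query)         # N > 1 labeled examples

print(n_shot)
```

The model's weights are untouched in all three cases; only the prompt changes.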

Step-by-Step Guide: Implementing Transfer Learning

Here’s a structured step-by-step guide to implementing transfer learning effectively.

1. Select a Pretrained Model

The first step is choosing the right pretrained model for your task. Since transfer learning depends on transferring knowledge from one domain to another, the model you select should have been trained on a dataset that shares relevant patterns with your target task.

How to Choose the Right Pretrained Model

Computer Vision: If working on image-related tasks, models like EfficientNet, ResNet, and VGG16 (trained on ImageNet) are great choices.

Natural Language Processing (NLP): Transformer-based models like BERT, GPT, and T5 are effective for text-related applications.

Speech Recognition: Wav2Vec 2.0, pretrained on diverse languages and accents, is useful for speech-to-text tasks.

If the pretrained model was trained on a domain too different from your target data, transfer learning might not work well — this is called negative transfer (more on this later).

2. Prepare the Dataset

To use a pretrained model, your target dataset must be formatted correctly to match the model’s input requirements.

For example:

EfficientNet-B0, trained on ImageNet, requires images to be 224×224 pixels. If your images are a different size, you’ll need to resize them before training.

BERT expects input text to be tokenized in a specific way (using WordPiece tokenization).

Speech models may require audio files in a specific sample rate or format.

Data preprocessing ensures the pretrained model can effectively process and learn from the target dataset.
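The image-resizing step can be sketched without any libraries, as below. This is a simplified illustration: it uses nearest-neighbor sampling on a made-up 300×400 image stored as nested lists, and `resize_nearest` is a hypothetical helper. Real pipelines would normally rely on an image library and also apply the normalization the pretrained model expects.

```python
def resize_nearest(image, out_h=224, out_w=224):
    """Resize a 2D grid of pixels (a list of rows) with nearest-neighbor sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

# Hypothetical 300x400 image where each pixel is an (R, G, B) tuple.
image = [[(r % 256, c % 256, 0) for c in range(400)] for r in range(300)]

resized = resize_nearest(image)
print(len(resized), len(resized[0]))
```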

3. Adapt the Model: Fine-Tuning vs. Few-Shot Learning

Once your data is ready, the next step is adapting the pretrained model to your specific task. There are two main approaches: fine-tuning and few-shot learning.

Fine-tuning involves updating the pretrained model’s weights to align with your new dataset. There are two ways to do this. Full fine-tuning updates all model layers, allowing the model to fully adapt to the new task, but this requires high computational power. Partial fine-tuning, on the other hand, keeps some layers frozen while updating only specific ones, making it a more efficient approach, especially when working with limited data.

Few-shot learning is an alternative approach when training data is scarce. Instead of retraining the model, a few labeled examples are provided during inference to help guide predictions. This technique is particularly useful for tasks where collecting a large dataset is impractical, such as specialized NLP tasks or scenarios with limited computational resources.

4. Evaluate the Model’s Performance

Once the model is fine-tuned (or adapted using few-shot learning), it must be tested on unseen data to ensure it generalizes well.

Common evaluation metrics include:

Classification tasks: Accuracy, Precision, Recall, F1-score

Object detection: Mean Average Precision (mAP)

Text generation & translation: BLEU score, Perplexity

A well-tuned model should not just memorize training examples—it should accurately perform on real-world, unseen data.
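For the classification metrics listed above, a minimal sketch of the calculations looks like this. The labels and predictions are invented for illustration, and `classification_metrics` is a hypothetical helper for the binary case; in practice a metrics library would usually be used instead.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical held-out labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision and recall matter most when classes are imbalanced, where accuracy alone can look high even if the model misses most positive cases.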

Applications of Transfer Learning

Transfer learning is widely used across various fields, allowing AI models to achieve state-of-the-art results without requiring massive labeled datasets.

1. Computer Vision

Pretrained vision models, such as EfficientNet, ResNet, and VGG16, have been successfully applied to:

Medical Imaging: Detecting breast cancer, pneumonia, or retinal diseases from medical images such as mammograms, chest X-rays, and retinal scans.

Autonomous Vehicles: Identifying pedestrians, traffic signs, and road objects.

2. Natural Language Processing (NLP)

Modern NLP models heavily rely on transfer learning. For example, BERT, pretrained on the Toronto Book Corpus and Wikipedia, has been fine-tuned for tasks like sentiment analysis, question answering, and named entity recognition.

3. Speech Recognition

Transfer learning is crucial in speech-to-text models, especially for regional accents and underrepresented languages. For example, Wav2Vec 2.0, pretrained on diverse speech datasets, can be fine-tuned to recognize low-resource languages or dialects.

Challenges of Transfer Learning

While transfer learning provides significant advantages, it also has limitations that need to be addressed.

1. Domain Mismatch (Negative Transfer)

Transfer learning works best when the source and target domains share similarities. If they are too different, the pretrained knowledge can hurt performance instead of improving it — this is known as negative transfer.

For example, a model trained on ImageNet, which consists of natural images, may struggle when applied directly to medical imaging, because medical scans have textures and patterns unlike those it saw during pretraining.

To avoid this, it is important to conduct a domain similarity analysis before selecting a pretrained model. If the domains are somewhat related but not identical, using partial fine-tuning to selectively update relevant layers can help the model adapt without losing useful knowledge from the original dataset.
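The article does not prescribe a specific method for domain similarity analysis, so the following is only one possible sketch. It assumes that comparing pixel-intensity histograms with total variation distance is an acceptable first-pass signal; the pixel values are invented, and both helpers are hypothetical.

```python
def histogram(values, bins=8, lo=0, hi=256):
    """Normalized histogram of values falling in [lo, hi)."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)
        counts[idx] += 1
    return [c / len(values) for c in counts]

def total_variation(p, q):
    """Total variation distance between two distributions: 0 = identical, 1 = disjoint."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

# Hypothetical pixel intensities sampled from each domain.
source_pixels = [10, 40, 90, 130, 170, 200, 220, 250]  # e.g. natural photos, spread out
target_pixels = [5, 12, 20, 25, 30, 35, 240, 250]      # e.g. scans, mostly dark

distance = total_variation(histogram(source_pixels), histogram(target_pixels))
print("distance:", distance)
```

A large distance does not prove that transfer will fail, and a small one does not guarantee success. It is a cheap early warning that is best confirmed by comparing against a baseline trained without the pretrained weights.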

2. Computational Cost

Fine-tuning large models like Vision Transformers (ViTs) or large language models (LLMs) such as Llama can be computationally expensive, requiring powerful hardware and long training times. This can be a major challenge, especially for those working in low-resource environments.

To address this, partial fine-tuning, where only specific layers of the model are updated, can significantly reduce computational costs. Another alternative is few-shot learning, which allows models to generalize with minimal training data and computational effort, making it a practical solution when resources are limited.

3. Overfitting on Small Datasets

Applying a large pretrained model to a small dataset can lead to overfitting, where the model memorizes noise instead of learning general patterns. This reduces its ability to perform well on new, unseen data.

One way to mitigate this is to freeze early layers of the model and fine-tune only the task-specific layers, ensuring that the model retains general knowledge while adapting to the new task.

Additionally, data augmentation, which creates variations of the existing dataset, can help by increasing the diversity of training examples and improving the model’s ability to generalize.
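Two common augmentations, random cropping and horizontal flipping, can be sketched in plain Python as follows. The tiny 4×4 "image", the crop size, and the 50% flip probability are illustrative assumptions, and the helper names are hypothetical; image libraries provide tuned equivalents.

```python
import random

def horizontal_flip(image):
    """Mirror each row of the image left to right."""
    return [row[::-1] for row in image]

def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def augment(image, rng, crop_h, crop_w):
    """Produce one randomized variant: random crop, then flip half of the time."""
    out = random_crop(image, crop_h, crop_w, rng)
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    return out

rng = random.Random(0)  # seeded so runs are repeatable
image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 grayscale image

variants = [augment(image, rng, 3, 3) for _ in range(5)]
for v in variants:
    print(v)
```

Each variant keeps the original label, so a small dataset yields many slightly different training examples.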

What’s Next?

Transfer learning has transformed machine learning by enabling models to reuse knowledge from large-scale datasets, reducing training time and the need for massive labeled data.

By selecting the right pretrained model, properly preparing the dataset, and choosing between fine-tuning and few-shot learning, we can effectively apply transfer learning across various fields, from computer vision to natural language processing and speech recognition.

Joel Olawanle is a Software Engineer and Technical Writer with over three years of experience helping companies communicate their products effectively through technical articles.
