I’ve always been intrigued by those videos of robots doing seemingly impossible things—balancing, running, solving puzzles. I kept thinking, how does a machine even learn to do that? That curiosity led me to discover something called reinforcement learning.

Reinforcement learning (RL) is a type of machine learning where an agent learns by interacting with its environment. It takes actions, gets rewards or penalties, and gradually figures out what works.

Of course, this kind of learning has its own challenges - things like delayed rewards, exploration vs. exploitation, and more. We’ll get into all that.

In this article, you’ll learn what reinforcement learning really is, how it works, where it's useful, and why it’s such a big deal in AI.

What Exactly Is Reinforcement Learning?

Reinforcement learning is a way of training machines (usually called agents) to make decisions through trial and error. The agent interacts with an environment, takes an action, gets some kind of feedback (a reward or penalty), and uses that information to improve its future actions.

Think of it like teaching a dog tricks. You say “sit,” the dog tries something, and if it sits, you give it a treat. If not, nothing - or maybe a light “no.” Over time, the dog learns which behavior gets the reward. That’s basically reinforcement learning, but for machines.

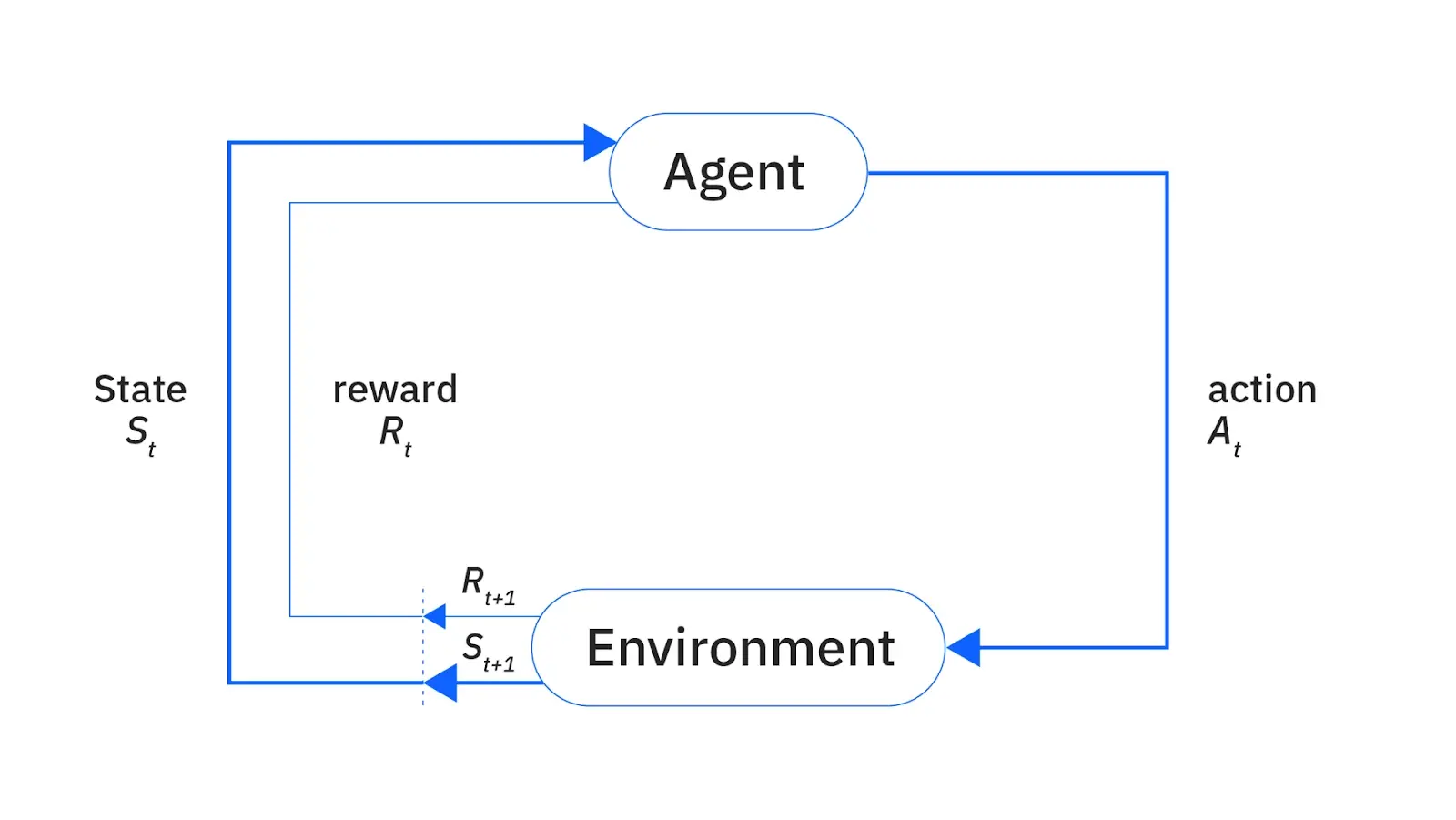

In an RL setup, there are usually five key components:

Agent – the learner or decision-maker.

Environment – everything the agent interacts with.

Action – what the agent chooses to do at any given step.

Reward – the feedback the agent gets based on its action.

State – a snapshot of the environment that helps the agent decide what to do next.

The image above shows this loop: the agent takes an action, the environment responds with a new state and a reward, and the agent uses that to adjust its behavior.
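To make the loop concrete, here is a minimal Python sketch. The environment is a hypothetical five-cell corridor (`CorridorEnv` and `run_episode` are names invented for illustration): the agent starts in cell 0, can move left or right, and earns +1 for reaching the last cell. The `reset`/`step` shape loosely follows the style used by libraries such as Gymnasium, simplified here to return `(next_state, reward, done)`. It is not that library's API.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells 0..length-1.

    The agent starts in cell 0. Reaching the last cell ends the episode
    with a reward of +1; every other step gives a reward of 0.
    """

    ACTIONS = ("left", "right")

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        # Start a new episode and return the initial state.
        self.position = 0
        return self.position

    def step(self, action):
        # Apply the action, then report (next_state, reward, done).
        if action == "right":
            self.position = min(self.position + 1, self.length - 1)
        else:
            self.position = max(self.position - 1, 0)
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Run one pass of the agent-environment loop and return the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(state)            # the agent acts
        state, reward, done = env.step(action)   # the environment responds
        total_reward += reward                   # the agent receives feedback
        if done:
            break
    return total_reward


random.seed(0)
env = CorridorEnv()
# A purely random agent: this shows the loop itself, with no learning yet.
print(run_episode(env, lambda s: random.choice(CorridorEnv.ACTIONS)))
```

The random agent never improves. The learning methods described later in the article replace `choose_action` with something that updates itself from the rewards it receives.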

The goal is to maximize the total reward collected over time, not just the immediate reward from the next action. That means the agent has to figure out strategies, not just react.
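One common way to formalize "total reward over time" is the discounted return. A discount factor gamma between 0 and 1 gives rewards that arrive later slightly less weight than immediate ones. The article does not commit to a particular formula, so treat this as one standard choice rather than the only one:

```python
def discounted_return(rewards, gamma=0.99):
    """Sum rewards, weighting the reward k steps ahead by gamma**k.

    Computes G = r0 + gamma*r1 + gamma**2*r2 + ... by folding from the end,
    using the recursion G_t = r_t + gamma * G_{t+1}.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# A delayed reward: nothing for three steps, then +1 on the fourth.
print(discounted_return([0, 0, 0, 1], gamma=0.9))  # about 0.729, i.e. 0.9 ** 3
```

With gamma close to 1 the agent values the long run almost as much as the present. With gamma near 0 it becomes short-sighted and mostly chases immediate rewards.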

This loop is repeated until the agent becomes proficient at the task it's trying to solve - whether that’s balancing a pole, playing chess, or managing resources in a game.

How Does the Agent Actually Learn?

Now that you’ve seen the core structure of reinforcement learning - the agent, environment, actions, states, and rewards - the next question is: how does the agent turn all that into actual learning?

The answer lies in experience.

Instead of learning from labeled examples, as in supervised learning, the RL agent learns through trial and error. It interacts with the environment, takes actions, and slowly figures out what works by observing the consequences. If an action leads to a good outcome (a reward), the agent leans toward doing it again. If it leads to something bad (a penalty), it adjusts.

This learning loop continues, and over time, the agent starts to develop a policy - a strategy for making decisions in different situations. The policy gets better as the agent collects more experience and fine-tunes its behavior to earn more rewards in the long run.

But to learn effectively, the agent can’t just stick to what already works. It has to explore new actions too, just in case there’s something better. This balance between exploration (trying new things) and exploitation (doing what’s already known to work) is at the heart of RL’s learning process.
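A simple and widely used way to strike this balance is epsilon-greedy action selection. With a small probability epsilon, the agent picks a random action (exploration). Otherwise, it picks the action it currently rates highest (exploitation). The sketch below assumes the agent stores its estimates in a plain dict mapping actions to values. The function name and values are illustrative.

```python
import random


def epsilon_greedy(action_values, epsilon=0.1, rng=random):
    """Pick an action from a dict of {action: estimated value}.

    With probability epsilon, explore by choosing uniformly at random;
    otherwise exploit by choosing the highest-valued action.
    """
    actions = list(action_values)
    if rng.random() < epsilon:
        return rng.choice(actions)                          # explore
    return max(actions, key=lambda a: action_values[a])     # exploit


estimates = {"left": 0.2, "right": 0.8}
print(epsilon_greedy(estimates, epsilon=0.0))  # prints: right
```

In practice, epsilon is often decayed over time. The agent then explores heavily at first and exploits more as its estimates improve.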

This results in a system that teaches itself how to act, not from labeled data, but from interacting with the world and learning what decisions bring the best results over time.

Key Concepts in Reinforcement Learning

To understand how reinforcement learning really works, it helps to zoom in on the core components. These are the building blocks that make up every RL setup, no matter how simple or complex the task.

Let’s walk through them.

Agent: The Decision Maker

The agent is the learner. It’s the part of the system that makes decisions, takes actions, and tries to improve over time. It doesn’t know much at the start - it just tries things, sees what happens, and gradually figures out what actions lead to better outcomes.

You can think of it like a player in a game. Every move it makes changes the game state and affects the final score. The better the player (agent) gets at understanding the game, the higher the reward it can earn.

Environment: The World It Interacts With

The environment is everything the agent interacts with. It defines the rules, the available actions, and the consequences of each move. It’s what gives feedback to the agent in the form of rewards and states.

In a self-driving car simulation, for example, the environment includes the road, traffic signs, weather conditions, and other cars. The environment is what reacts when the agent does something—whether that’s turning, accelerating, or crashing into a wall.

State: The Situation the Agent Is In

A state is a snapshot of what’s going on at any given moment. It’s how the environment describes the current situation to the agent.

For example, in a video game, a state might include the player’s location, speed, health level, and nearby enemies. The agent uses this information to decide what to do next.

States are critical, because the agent’s decisions depend entirely on what it knows about its current situation.

Action: What the Agent Can Do

An action is exactly what it sounds like - it’s a move the agent can make in a given state. The list of possible actions depends on the environment.

In a chess game, actions are the possible legal moves. In a warehouse robot scenario, they might be things like “turn left,” “pick up box,” or “move forward.”

The agent picks an action based on its current state and its policy (more on that in a second).

Reward: Feedback That Drives Learning

Rewards are the key to everything in reinforcement learning.

A reward is a number the agent receives after taking an action. It tells the agent how good or bad the outcome was. Positive rewards encourage the agent to repeat a behavior, while negative rewards (or penalties) discourage it. For example:

+1 for reaching a goal

-1 for crashing

0 for doing something neutral
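Written as code, a reward signal like this is just a function from the outcome of a step to a number. The outcome names below are hypothetical; a real environment would define its own.

```python
def reward(outcome):
    """Map the outcome of a single step to a scalar reward (illustrative values)."""
    if outcome == "reached_goal":
        return 1.0
    if outcome == "crashed":
        return -1.0
    return 0.0  # anything else counts as neutral


episode = ["moved", "moved", "reached_goal"]
print(sum(reward(o) for o in episode))  # 1.0
```

The agent optimizes exactly this number. Small changes in how it is defined can therefore change the behavior the agent ends up learning.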

The agent’s goal is to maximize total rewards over time, not just in one step, but across a full task or episode. This is what makes RL about strategy, not just reactions.

Policy (π): The Agent’s Strategy

A policy is the agent’s brain. It’s the function or rule the agent uses to decide what to do in any given state.

At first, the policy might be completely random. But over time, as the agent sees what works and what doesn’t, it updates its policy to favor better decisions. The better the policy, the better the agent performs.

You can think of a policy as a map:

“If I’m in state A, do action X.”

“If I’m in state B, do action Y.”

This is ultimately what the agent is trying to learn: a smart, consistent way to choose good actions in any situation.
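For a small problem with a handful of states, that map can literally be a lookup table. The sketch below contrasts a deterministic policy (one fixed action per state) with a stochastic one that assigns probabilities to actions, like a policy that starts out random. The states "A"/"B" and actions "X"/"Y" are placeholders mirroring the example above, and `act` is a hypothetical helper.

```python
import random

# Deterministic policy: one fixed action per state.
deterministic_policy = {"A": "X", "B": "Y"}

# Stochastic policy: a probability distribution over actions for each state.
stochastic_policy = {
    "A": {"X": 0.9, "Y": 0.1},
    "B": {"X": 0.2, "Y": 0.8},
}


def act(policy, state, rng=random):
    """Return an action for `state` under either kind of policy."""
    choice = policy[state]
    if isinstance(choice, dict):
        actions = list(choice)
        weights = [choice[a] for a in actions]
        return rng.choices(actions, weights=weights, k=1)[0]
    return choice


print(act(deterministic_policy, "A"))  # X
print(act(stochastic_policy, "B"))     # Y with probability 0.8, otherwise X
```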

Types of Reinforcement Learning

There’s more than one way for an agent to learn a good strategy in reinforcement learning. Depending on how the problem is framed and what kind of feedback is available, there are three main approaches:

1. Value-Based Reinforcement Learning

In value-based methods, the agent tries to estimate how good each action is in a given state. It doesn’t directly learn the best move—it learns the value of moves, and then picks the one with the highest value.

Think of it like having a cheat sheet that tells you, “If you’re in this situation, doing X usually gives good results.” Over time, the agent builds up this cheat sheet based on experience.

Q-learning is a popular example. In its basic (tabular) form, it uses a Q-table, where each row is a state and each column is a possible action. Each entry estimates the long-term reward the agent can expect from taking that action in that state. The entries are updated after every step as the agent learns which actions lead to better rewards.
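Here is a minimal sketch of tabular Q-learning on a hypothetical five-cell corridor, where the agent moves left or right and earns +1 for reaching the right end. The Q-table is a dict keyed by (state, action). After each step, the visited entry is nudged toward the reward plus the discounted value of the best next action: Q(s, a) ← Q(s, a) + alpha * (r + gamma * max Q(s', a') − Q(s, a)). The hyperparameters (alpha = 0.1, gamma = 0.9, epsilon = 0.1, 500 episodes) are arbitrary illustrative choices.

```python
import random
from collections import defaultdict

N_STATES = 5          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)    # move left, move right


def step(state, action):
    """Hypothetical corridor dynamics: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy_action(q, state, rng):
    """Highest-valued action in `state`, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # the Q-table: (state, action) -> value, 0.0 by default
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: occasionally explore, otherwise exploit the table.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy_action(q, state, rng)
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus the discounted best value of the
            # next state (zero once the episode has ended).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # after training, +1 (move right) should win in every cell
```

Random tie-breaking matters here. If the untrained agent always picked the first of several equal-valued actions, it would keep walking into the left wall and take a very long time to find the reward at all.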

This approach works well when:

The environment is small or simple enough to track these values

The actions are discrete, so the agent can simply compare their values and pick the best move at each step

But in big or complex environments, the table can get massive, or impossible to store. That’s where the next approach comes in.

2. Policy-Based Reinforcement Learning

Instead of trying to guess how good each move is, policy-based methods go straight to the goal: learn the best strategy directly.

In this setup, the agent doesn’t need a value table. It trains a function (often a neural network) that takes in the current state and outputs which action to take, usually as a probability for each possible action.
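As a minimal sketch of the policy-based idea, here is the classic REINFORCE policy-gradient update applied to the simplest possible problem. The task is hypothetical: a single state and two actions, where action 1 pays more on average. Instead of a neural network, the policy is a softmax over two learnable preferences, which keeps the example dependency-free. The payoffs, learning rate, and step count are made up for illustration, and the running-average baseline is a common variance-reduction addition rather than part of the bare algorithm.

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(steps=2000, lr=0.1, seed=0):
    """REINFORCE with a running-average baseline on a one-state, two-action task."""
    rng = random.Random(seed)
    true_means = [0.2, 0.8]   # hypothetical average payoff of actions 0 and 1
    prefs = [0.0, 0.0]        # policy parameters: one preference per action
    baseline = 0.0            # running average of rewards seen so far
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices([0, 1], weights=probs, k=1)[0]  # sample from the policy
        reward = rng.gauss(true_means[action], 0.1)
        advantage = reward - baseline
        baseline += (reward - baseline) / t
        # Policy-gradient step: d log pi(action) / d prefs[b] = 1[b == action] - probs[b]
        for b in range(len(prefs)):
            grad_log_pi = (1.0 if b == action else 0.0) - probs[b]
            prefs[b] += lr * advantage * grad_log_pi
    return softmax(prefs)


print(reinforce_bandit())  # most of the probability should end up on action 1
```

In the settings listed below, the two preferences would be replaced by a network that maps a state to action probabilities. For continuous actions, the network would instead output the parameters of a distribution, such as the mean of a Gaussian.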

This approach is better when:

You’re dealing with continuous actions (e.g., adjusting angles or speeds)

You need smoother, more complex behaviors, like coordinating multiple robotic arms

The strategy isn’t just about short-term reward but also overall coordination

It can also learn more stably in some settings, because small updates shift the action probabilities gradually rather than flipping abruptly from one action to another.

3. Model-Based Reinforcement Learning

Model-based RL is a bit more advanced. Here, the agent doesn’t just react; it builds an internal model of the environment and uses that model to plan ahead.

In other words, instead of just trying things and seeing what happens, the agent learns to predict what would happen if it took a certain action. Then, it uses those predictions to make smarter choices.
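Here is a minimal sketch of the model-based idea under simplifying assumptions. The environment is a hypothetical five-cell corridor with deterministic left/right moves and +1 at the right end. The agent first records transitions from randomly chosen states and actions (assuming, for simplicity, that it can try any state) to build its model. It then plans entirely inside that model with value iteration, never touching the real environment again. Value iteration is just one planning method; the article does not prescribe a specific one.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the terminal goal
ACTIONS = (-1, +1)    # move left, move right


def real_step(state, action):
    """The 'real' environment: the agent can sample it but doesn't see this code."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    return next_state, (1.0 if next_state == N_STATES - 1 else 0.0)


def learn_model(n_samples=200, seed=0):
    """Record observed transitions as a model {(state, action): (next_state, reward)}."""
    rng = random.Random(seed)
    model = {}
    for _ in range(n_samples):
        s = rng.randrange(N_STATES - 1)   # a random non-terminal state
        a = rng.choice(ACTIONS)
        model[(s, a)] = real_step(s, a)   # the dynamics are deterministic here
    return model


def backup(model, values, s, a, gamma):
    """The model's prediction of reward plus discounted next-state value."""
    next_s, r = model[(s, a)]
    return r + gamma * values[next_s]


def plan(model, gamma=0.9, sweeps=50):
    """Value iteration that consults only the learned model, never real_step."""
    values = [0.0] * N_STATES             # the terminal cell keeps value 0
    for _ in range(sweeps):
        for s in range(N_STATES - 1):
            known = [a for a in ACTIONS if (s, a) in model]
            if known:
                values[s] = max(backup(model, values, s, a, gamma) for a in known)
    policy = {}
    for s in range(N_STATES - 1):
        known = [a for a in ACTIONS if (s, a) in model]
        if known:
            policy[s] = max(known, key=lambda a: backup(model, values, s, a, gamma))
    return values, policy


values, policy = plan(learn_model())
print(policy)  # planning in the model should choose +1 (move right) everywhere
```

If the learned model were wrong (for example, a recorded transition that doesn’t match reality), the plan would faithfully optimize the wrong thing. This is the risk described later in this section.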

This is useful in scenarios where:

Interacting with the real environment is expensive, risky, or slow (like robotics or drug testing)

The agent benefits from planning ahead multiple steps

You want fewer trial-and-error loops

It’s more like playing chess in your head before making a move.

Of course, building a good model isn’t easy. If your predictions are wrong, your whole strategy might fall apart. But when done right, model-based learning can be incredibly efficient.

Where Reinforcement Learning Is Being Used

Reinforcement learning is already making a real impact in the world, far beyond just playing games or running robot simulations. Let’s look at how it’s being used in practical, high-stakes environments.

Finance & Trading

In the world of financial markets, decisions have to be fast, data-driven, and constantly adaptive. That makes RL a natural fit.

Trading bots use reinforcement learning to decide when to buy or sell, based on market signals like price changes, news sentiment, or historical patterns. The agent is trained to maximize long-term profit, while minimizing risk, by treating the stock market like an environment it can learn to navigate.

Even more advanced setups combine RL with deep learning to process massive data streams, aiming for strategies that can outperform traditional rule-based systems.

Cybersecurity in IoT

Reinforcement learning is also being used to detect and respond to cyber threats, especially in networks of connected devices (IoT). These environments are complex and change constantly, making static security rules unreliable.

RL-based systems can learn to:

Spot unusual behavior (like sudden data spikes or access attempts)

Adjust defenses on the fly

Minimize system damage while still staying online

Instead of waiting for a human to react to an attack, the system defends itself in real time and learns from each attempt.

Energy Management

Energy systems, like those in smart buildings or electric grids, are tricky to manage. You want to keep people comfortable, avoid waste, and adjust to supply changes (like solar energy dropping at sunset).

RL helps by learning how to optimize energy use:

Adjusting heating/cooling in real time

Managing electric vehicle charging

Balancing loads between renewable and non-renewable sources

The agent learns patterns in usage and weather, and figures out when to conserve, when to store, and when to spend.

Robotics & Automation

Robots are a natural fit for RL because they need to act in the real world, where unpredictability is everywhere.

Whether it's a drone navigating a forest, a robotic arm assembling parts, or a warehouse bot avoiding collisions, reinforcement learning allows these systems to learn motion and decision-making without being hardcoded for every situation.

And because the feedback comes from real physical outcomes (success or failure), RL lets the robot adapt its behavior to what actually happens rather than to what its designers anticipated.

These are just a few areas. Reinforcement learning is also showing up in:

Self-driving cars (lane control, merging, route planning)

Healthcare (personalized treatment recommendations)

Games (obviously - AlphaGo, Dota bots, etc.)

Wrapping Up

Reinforcement learning is one of the most exciting areas in AI, not because it mimics data, but because it learns from interaction. It trains agents to make decisions, adapt to new situations, and improve over time, all without explicit instructions.

But it’s not all smooth sailing. RL can be hard to train, slow to converge, and sensitive to reward design. Still, as research advances and computing power grows, these challenges are being chipped away.

What’s clear is that reinforcement learning isn't just a research curiosity - it’s a practical tool shaping the future of intelligent decision-making.


Joel Olawanle is a Software Engineer and Technical Writer with over three years of experience helping companies communicate their products effectively through technical articles.
