Machine learning can seem intimidating, but it doesn’t have to be. With tools like TensorFlow, building a machine learning model is more accessible than ever.

This blog post will walk you through creating your first neural network to classify handwritten digits using the MNIST dataset. Don’t worry, we’ll explain everything in simple terms so even beginners can follow along.

Let’s get started!

What is TensorFlow?

TensorFlow is a powerful framework developed by Google for machine learning and deep learning. It provides tools to create, train, and deploy machine learning models efficiently. In simple terms, TensorFlow does the heavy lifting, like complex math operations, so you can focus on solving problems.

If you’ve ever wondered how computers can recognize faces, translate languages, or even recommend movies, TensorFlow is one of the tools that make those things possible.

What Are Neural Networks?

> A neural network is a type of machine learning model loosely inspired by the way the human brain works. It’s made up of layers of nodes (neurons) that process data to recognize patterns and make predictions.

Here’s how it works:

- Input layer: This takes the raw data (e.g., an image of a handwritten digit).
- Hidden layers: These process the data, looking for patterns (e.g., edges, curves, shapes).
- Output layer: This produces the final prediction (e.g., the number 7).

For example, in our project:

- The input is a 28x28 grayscale image of a handwritten digit.
- The output is a predicted digit between 0 and 9.
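To make the three kinds of layers concrete, here is a minimal plain-Python sketch of one forward pass through a toy network. It illustrates the idea under stated assumptions and is not how TensorFlow implements layers: the network has only 4 inputs, 3 hidden neurons, and 2 outputs (instead of 784 inputs and 10 outputs), the weights are made up rather than learned, and the helper functions dense() and relu() are hypothetical names chosen for this example.

```python
def relu(x):
    """Return x if it is positive, otherwise 0."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


# Input layer: raw data (four pixel intensities already scaled to 0-1)
pixels = [0.0, 0.5, 1.0, 0.25]

# Hidden layer: 3 neurons, each with one weight per input (weights are hypothetical)
hidden = dense(
    pixels,
    weights=[[0.2, -0.4, 0.6, 0.1], [-0.3, 0.8, 0.1, 0.5], [0.5, 0.5, -0.9, 0.0]],
    biases=[0.1, 0.0, 0.2],
    activation=relu,
)

# Output layer: 2 neurons, one score per class (weights are hypothetical)
scores = dense(hidden, weights=[[1.0, -1.0, 0.5], [-0.5, 1.0, 0.3]], biases=[0.0, 0.1])

# The prediction is the class whose output neuron has the highest score
prediction = max(range(len(scores)), key=lambda i: scores[i])
print(hidden, scores, prediction)
```

A real network does exactly this kind of arithmetic, just with many more neurons and with weights found during training.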

Neural networks are great at recognizing patterns in images, text, and other complex data.

Prerequisites

Before we start, here’s what you need to follow along:

- Python environment: You can either:
  - Install Python, Jupyter Notebook, and TensorFlow on your computer, or
  - Use an online platform like Google Colab (recommended for beginners) or any hosted Jupyter Notebook.
- Basic Python knowledge: Familiarity with Python syntax will help, but this guide is beginner-friendly.
- Internet access: Required if you’re using Google Colab or downloading datasets.

In this post, we’ll use Google Colab for simplicity, as it requires no installation and comes with TensorFlow pre-installed.

Let’s Build Your First Neural Network

As mentioned earlier, we’ll use the MNIST dataset for our neural network. But why do we need a dataset? Think of a dataset as the "textbook" that a neural network studies to learn. It provides examples for the model to analyze, along with the correct answers, helping it understand patterns and make accurate predictions.

In this project, we’re using the MNIST dataset, which is a collection of 70,000 grayscale images of handwritten digits. Here’s why it’s ideal:

- Each image is 28x28 pixels, small and easy to process.
- Every image is labeled with the correct digit (0–9), so the model knows what it’s trying to predict.

With this dataset, we’ll teach our neural network to recognize and classify handwritten digits. Let’s dive in!

Step 1: Import Libraries

Before we begin building the neural network, we need to import a few libraries. These libraries are pre-written tools that make it easier to work with machine learning, numerical operations, and data visualization.

Here’s what we’re importing and why:

- TensorFlow: This is the core library we’ll use to build and train our neural network.
- Keras (part of TensorFlow): Provides a simple interface for building deep learning models.
- Matplotlib: Used to visualize the data, such as images and training results.
- NumPy: Helps with numerical operations, like handling arrays of data.

Here’s the code to import these libraries:

```python
import tensorflow as tf
from tensorflow.keras import layers, models
import matplotlib.pyplot as plt
import numpy as np
```

Once this is done, we’re ready to move on to the next step: loading the dataset.

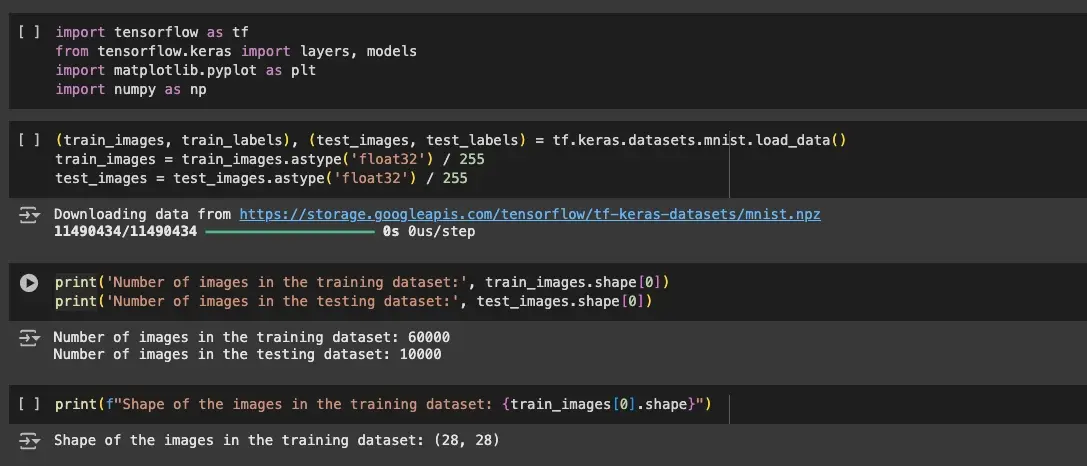

Step 2: Load and Preprocess the Dataset

To train our neural network, we are using the MNIST dataset. Let’s load and prepare the dataset for our model.

TensorFlow makes it easy to load the MNIST dataset. Here’s the code to do it:

```python
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()
```

train_images and train_labels contain 60,000 images and their corresponding labels (digits 0–9); this data is used to train the neural network. test_images and test_labels contain 10,000 images and labels that we’ll use to evaluate how well the trained model performs on data it hasn’t seen.

Neural networks work best with normalized data, so we need to scale the pixel values from their original range of 0–255 down to 0–1. We also convert the pixel values to floating-point numbers (float32) for better compatibility with the model.

Here’s the code to normalize the data:

```python
train_images = train_images.astype('float32') / 255
test_images = test_images.astype('float32') / 255
```

This matters because neural networks tend to train faster and more stably when input values share a small, uniform range such as 0 to 1; large raw values like 255 can make training unstable.
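As a quick check of the arithmetic, the sketch below applies the same divide-by-255 scaling to a made-up row of pixel values in plain Python. Note that plain Python floats are 64-bit, whereas the tutorial’s NumPy arrays use float32.

```python
# A hypothetical row of MNIST pixel values: integers from 0 (black) to 255 (white)
raw_row = [0, 51, 128, 255]

# Dividing by 255 maps every value into the range 0.0-1.0 and keeps their order
normalized_row = [value / 255 for value in raw_row]

print(normalized_row)
```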

Now that the dataset is loaded and normalized, let’s inspect it to understand its structure. We’ll print the shape of the datasets and look at the shape of an individual image.

```python
print(f"Training data shape: {train_images.shape}, Training labels shape: {train_labels.shape}")
print(f"Testing data shape: {test_images.shape}, Testing labels shape: {test_labels.shape}")
print(f"Shape of the images in the training dataset: {train_images[0].shape}")
```

The output should look like this:

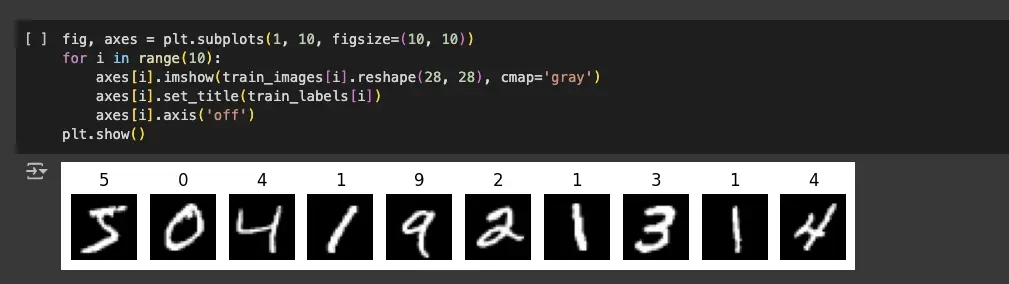

Before we proceed to the next step, let’s take a quick look at some sample images and their corresponding labels to understand what the model will be working with.

```python
# Display the first 10 images and their labels
fig, axes = plt.subplots(1, 10, figsize=(10, 10))
for i in range(10):
    axes[i].imshow(train_images[i], cmap='gray')
    axes[i].set_title(train_labels[i])
    axes[i].axis('off')
plt.show()
```

This code displays the first 10 images from the training dataset, each titled with its label.

Step 3: Build the Neural Network

Now that our dataset is ready, it’s time to build the neural network. We’ll use TensorFlow’s Sequential API to stack layers and define the structure of our neural network. Here’s the code:

```python
model = models.Sequential([
    layers.Input(shape=(28, 28, 1)),        # Input layer: accepts 28x28 grayscale images
    layers.Flatten(),                       # Converts 2D images into a 1D vector of 784 pixels
    layers.Dense(128, activation='relu'),   # First hidden layer with 128 neurons
    layers.Dense(64, activation='relu'),    # Second hidden layer with 64 neurons
    layers.Dense(10, activation='softmax')  # Output layer with 10 neurons (one for each digit)
])
```
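Each Dense layer learns one weight for every input-neuron pair plus one bias per neuron, while the Input and Flatten layers learn nothing. The plain-Python sketch below (the helper dense_params() is a hypothetical name) reproduces that arithmetic for this architecture; calling model.summary() in Keras should report the same total.

```python
def dense_params(inputs, units):
    """Weights (inputs x units) plus one bias per unit."""
    return inputs * units + units


flattened = 28 * 28                    # Flatten turns each 28x28 image into 784 values
layer_sizes = [flattened, 128, 64, 10]  # input size, then the three Dense layers

# Pair each layer's input size with its number of neurons
per_layer = [dense_params(n_in, n_out) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [100480, 8256, 650]
print(total)      # 109386
```

Almost all of the parameters sit in the first hidden layer, because every one of its 128 neurons connects to all 784 pixels.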

Understanding the Layers

Input Layer (Input): This layer specifies the shape of the input data: 28x28 pixels with 1 channel (grayscale). It doesn't process the data but ensures the network knows the format of incoming images.

Flatten Layer: This layer converts the 28x28 grid of pixels into a single row of 784 numbers (a 1D array). This is necessary because a Dense (fully connected) layer operates on the last axis of its input, so flattening lets every neuron in the first hidden layer see all 784 pixels at once.
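The sketch below shows the idea behind flattening on a tiny 3x3 stand-in for a 28x28 image, using a plain Python list comprehension rather than Keras. It reads the grid row by row, the same row-major order NumPy’s reshape uses by default.

```python
# A tiny 3x3 "image" standing in for a 28x28 MNIST digit
image = [
    [0, 1, 2],
    [3, 4, 5],
    [6, 7, 8],
]

# Flatten: read the rows one after another into a single list
flat = [pixel for row in image for pixel in row]

print(flat)       # [0, 1, 2, 3, 4, 5, 6, 7, 8]
print(len(flat))  # 9; a 28x28 image would give 28 * 28 = 784 values
```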

Dense Layers:

Hidden Layers: The first dense layer has 128 neurons, and the second has 64 neurons. Each neuron computes a weighted sum of all its inputs, adds a bias, and passes the result through an activation function. We use the ReLU (Rectified Linear Unit) activation, which outputs max(0, x); this non-linearity lets the model learn patterns that a plain weighted sum could not capture.

Output Layer: This layer has 10 neurons, one for each possible digit (0–9). We use the softmax activation function, which turns the 10 raw outputs into probabilities that are all positive and sum to 1.
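Softmax is short enough to write out by hand. This plain-Python sketch is a stand-in for the built-in Keras activation and uses three hypothetical scores instead of ten. It exponentiates each score and divides by the total, so the outputs are positive and sum to 1. Subtracting the largest score first is a common trick to avoid overflow and does not change the result.

```python
import math


def softmax(scores):
    """Turn raw output scores into probabilities that are positive and sum to 1."""
    largest = max(scores)  # shifting by the max keeps exp() from overflowing
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for 3 classes (the real output layer has 10)
probabilities = softmax([2.0, 1.0, 0.1])
predicted_class = probabilities.index(max(probabilities))

print([round(p, 3) for p in probabilities])
print(predicted_class)  # 0
```

The highest score always gets the highest probability, so picking the largest probability gives the same answer as picking the largest raw score.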

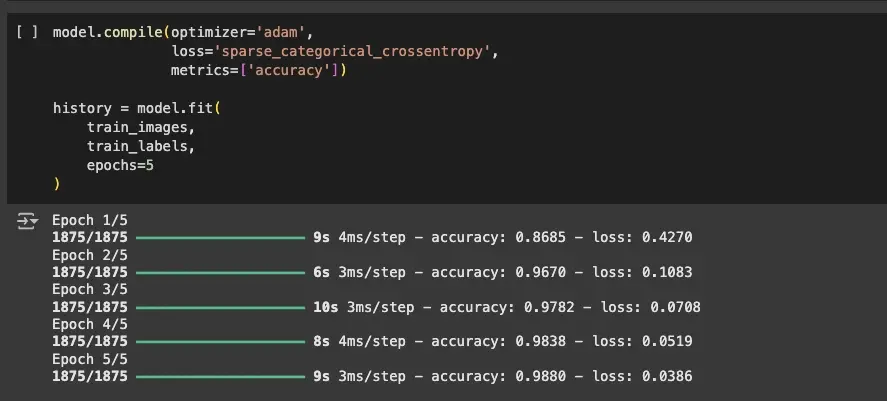

Step 4: Compile the Neural Network

Now that we’ve built the structure of the neural network, we need to compile it. Compiling is where we define how the model will learn, measure errors, and track performance during training.

Here’s how we compile the model:

```python
model.compile(
    optimizer='adam',
    loss='sparse_categorical_crossentropy',
    metrics=['accuracy']
)
```

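In the code above, optimizer='adam' selects the Adam optimizer, which adapts the step size for each weight during training based on running averages of its gradients. loss='sparse_categorical_crossentropy' suits multi-class classification when the labels are integers (e.g., 0–9) rather than one-hot vectors. metrics=['accuracy'] reports the fraction of correctly classified images as training runs.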
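For a single image, sparse categorical cross-entropy is the negative log of the probability the model assigns to the correct class, where the label is an integer class index. The plain-Python function below is a simplified stand-in, not the Keras implementation (Keras also averages the loss over the images in each batch), and the probability vectors are hypothetical. It shows why the loss rewards confident correct answers and heavily penalizes confident wrong ones.

```python
import math


def sparse_categorical_crossentropy(label, probabilities):
    """Loss for one example: -log of the probability given to the correct class.

    label is an integer class index (e.g. 2), not a one-hot vector.
    """
    return -math.log(probabilities[label])


# Hypothetical softmax outputs for one image whose true label is 2
confident_and_right = [0.05, 0.05, 0.90]
unsure = [0.30, 0.30, 0.40]
confident_and_wrong = [0.90, 0.05, 0.05]

for probs in (confident_and_right, unsure, confident_and_wrong):
    print(round(sparse_categorical_crossentropy(2, probs), 3))
```

The printed losses increase from the first case to the last, which is the signal the optimizer uses to push probability toward the correct digit.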

Step 5: Train the Neural Network

Now that our neural network is compiled, it’s time to train it. Training is the process where the model learns patterns from the dataset by adjusting its weights and biases over several full passes through the training data (epochs).
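Under the hood, adjusting the weights means nudging each one in the direction that reduces the loss. The plain-Python sketch below shows the idea on a deliberately tiny problem: a single weight w, made-up data generated with w = 3, a mean-squared-error loss, and plain full-batch gradient descent. That is a simplification; our model has over 100,000 weights and biases, uses cross-entropy loss, and is trained with the Adam optimizer on mini-batches.

```python
# Toy data generated with a "true" weight of 3 (hypothetical, for illustration only)
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

w = 0.0              # start from an arbitrary guess
learning_rate = 0.01

for epoch in range(50):  # each epoch is one full pass over the data
    # Gradient of the mean squared error (w*x - y)**2 with respect to w
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    # Move the weight a small step against the gradient to reduce the loss
    w -= learning_rate * grad

print(round(w, 3))  # close to 3.0
```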

Here’s how to train the model using the training dataset:

```python
history = model.fit(
    train_images,  # Input images
    train_labels,  # Correct labels
    epochs=5,      # Number of times the model will iterate over the entire dataset
)
```

train_images and train_labels provide the data the model uses to learn. The parameter epochs=5 specifies how many times the model will go through the entire training dataset, with each pass refining the model's understanding.
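model.fit doesn’t push all 60,000 images through at once; it splits them into mini-batches and updates the weights after each one. Assuming Keras’s default batch size of 32 (we didn’t pass batch_size), the back-of-the-envelope arithmetic below shows how many weight updates five epochs involve. The 1875/1875 counter you may see in the training progress bar comes from the same calculation.

```python
import math

train_size = 60_000  # images in the MNIST training set
batch_size = 32      # assumption: Keras's default when fit() gets no batch_size
epochs = 5

# Each epoch walks through the training set one mini-batch at a time,
# updating the weights once per batch.
steps_per_epoch = math.ceil(train_size / batch_size)
total_updates = steps_per_epoch * epochs

print(steps_per_epoch)  # 1875
print(total_updates)    # 9375
```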

When you run the code, Keras prints a progress line for each epoch that reports the loss and accuracy on the training data.

In the output, loss represents the error on the training data, where lower values indicate better performance. Accuracy is the fraction of training images predicted correctly (for example, 0.97 means 97%).
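The accuracy metric compares the model’s predicted class (the index of the highest probability) with the true label and reports the fraction that match. Here is that calculation in plain Python on four hypothetical predictions; it sketches the idea and is not Keras’s metric code.

```python
# Hypothetical predicted probabilities for 4 images (3 classes to keep it short)
batch_probabilities = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
]
true_labels = [0, 1, 1, 0]

# The predicted class is the index of the largest probability (what np.argmax does)
predicted = [row.index(max(row)) for row in batch_probabilities]

# Count matches and divide by the number of images
correct = sum(p == t for p, t in zip(predicted, true_labels))
accuracy = correct / len(true_labels)

print(predicted)  # [0, 1, 2, 0]
print(accuracy)   # 0.75
```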

Step 6: Evaluate the Neural Network and Visualize Predictions

After training, we want to evaluate the model’s performance on the test dataset and visualize how well it predicts for individual test images.

Let’s create a function to display the test image alongside the model’s predicted probabilities for each digit.

```python
def view_classify(image, probabilities):
    fig, (ax1, ax2) = plt.subplots(figsize=(6, 9), ncols=2)

    # Display the image
    ax1.imshow(image.squeeze(), cmap='gray')
    ax1.axis('off')

    # Display the probabilities as a horizontal bar chart
    ax2.barh(np.arange(10), probabilities)
    ax2.set_aspect(0.1)
    ax2.set_yticks(np.arange(10))
    ax2.set_yticklabels(np.arange(10))
    ax2.set_title('Class Probability')
    ax2.set_xlim(0, 1.1)

    plt.tight_layout()
```

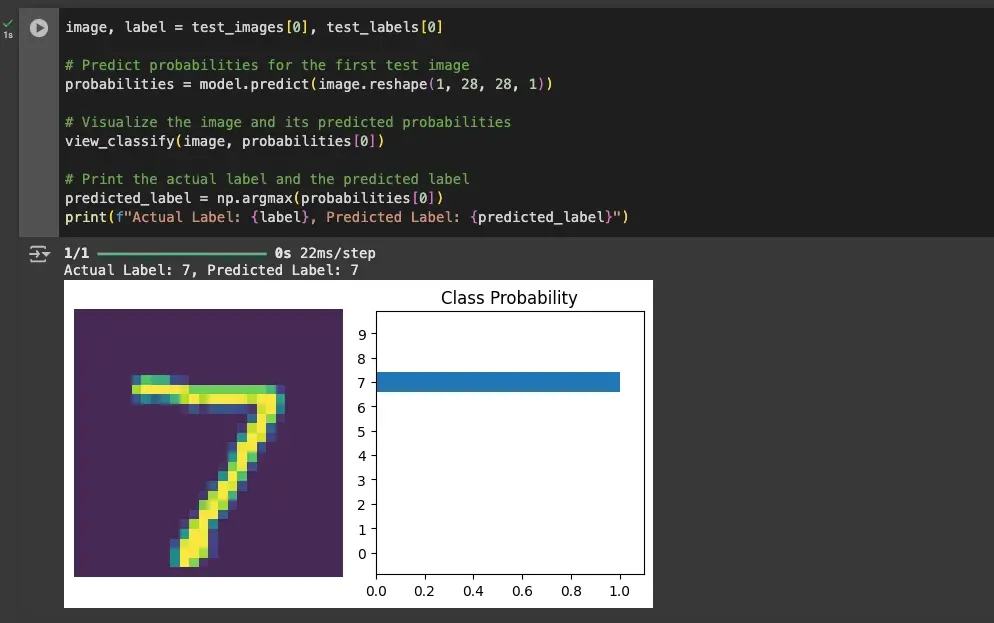

Now, let’s pass the first image from the test dataset through the model and visualize the result:

```python
image, label = test_images[0], test_labels[0]

# Predict probabilities for the first test image
probabilities = model.predict(image.reshape(1, 28, 28, 1))

# Visualize the image and its predicted probabilities
view_classify(image, probabilities[0])

# Print the actual label and the predicted label
predicted_label = np.argmax(probabilities[0])
print(f"Actual Label: {label}, Predicted Label: {predicted_label}")
```

In the code above, image.reshape(1, 28, 28, 1) adjusts the image to match the input shape required by the model (batch size, height, width, channels). Meanwhile, np.argmax(probabilities[0]) identifies the digit with the highest predicted probability.

The output is as follows:

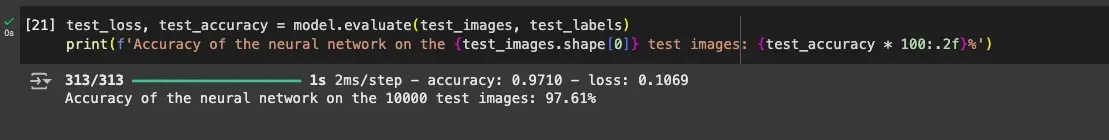

Finally, evaluate the overall accuracy of the model using all 10,000 test images.

```python
test_loss, test_accuracy = model.evaluate(test_images, test_labels)
print(f"Test Accuracy: {test_accuracy * 100:.2f}%")
```

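When you run this, Keras prints a progress bar followed by the test loss and accuracy. Your exact numbers will vary from run to run.

The 313/313 in the progress bar means the evaluation processed all 313 batches: with Keras’s default batch size of 32, the 10,000 test images split into 312 full batches plus one partial batch. In one run, the test loss was 0.1069, a relatively low error, and the accuracy was about 0.97, meaning the model correctly classified roughly 97% of the test images.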

Conclusion

Congratulations! You’ve successfully built, trained, and evaluated your first neural network using TensorFlow.

Building your first neural network is an important milestone in your machine learning journey. As you continue exploring, you’ll gain deeper insights into how these models work and how to apply them to solve real-world problems. TensorFlow is a powerful tool, and now you’ve taken the first step toward mastering it.

Happy coding, and keep learning! 🚀


Joel Olawanle is a Software Engineer and Technical Writer with over three years of experience helping companies communicate their products effectively through technical articles.
