Facial emotion recognition is a fascinating and powerful application of computer vision and machine learning, offering insights into human emotions through advanced technology. We will guide you through creating a real-time facial emotion recognition system using Python and OpenCV.

This tutorial will demonstrate how an emotion recognition system is built to detect emotions in real-time from live video feeds.

Prerequisites

Before we start with the code, you must have the following prerequisites:

- Basic understanding of Python programming

- Familiarity with OpenCV for image and video processing

- Basic knowledge of machine learning concepts

Some packages that we will be using are as follows:

- OpenCV (cv2)

- FER (Facial Expression Recognition)

These can easily be installed by using the pip command.

pip install opencv-python

The command above, when executed, will install the OpenCV library for Python using the Python package manager, pip.

pip install fer

The command automatically installs the FER library for Python. The fer library detects facial expressions in both images and videos.
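Before moving on, it can help to confirm that both packages are importable from the interpreter you plan to use. The following is a minimal sketch using only the standard library; the helper name missing_packages is hypothetical and is not part of OpenCV or FER.

```python
from importlib.util import find_spec


def missing_packages(module_names):
    """Return the module names that the current interpreter cannot find."""
    return [name for name in module_names if find_spec(name) is None]


# "cv2" is the import name of opencv-python; "fer" is the import name of fer.
missing = missing_packages(["cv2", "fer"])
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```

If a name is reported as missing, rerun the matching pip command in the same environment that runs the script.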

Setting Up the Environment

Let's start by setting up the environment: importing the necessary libraries, initializing the webcam, and the FER model.

import cv2

from fer import FER

# Initialize the webcam

cap = cv2.VideoCapture(0)

# Initialize the FER model

detector = FER()

The snippet above sets up an application for real-time facial emotion recognition through a webcam. It starts by importing the OpenCV library (cv2) and the FER (Facial Expression Recognition) library.

A webcam is initialized with cv2.VideoCapture(0), which will start capturing video from the default webcam, usually the built-in one on your laptop. After that, an instance of the FER model is initialized using detector = FER(), which will be used to analyze the video feed for real-time emotion detection on human faces recorded by the webcam.

Understanding the Code

The steps below explain how the code works.

Initializing the Webcam

The first step is to initialize the webcam. We use OpenCV's VideoCapture function to access the default webcam (indexed by 0). If you have multiple webcams, you can change the index to access a different one.

cap = cv2.VideoCapture(0)

The call cv2.VideoCapture(0) creates a capture object named cap that reads video from the default camera, which is usually the built-in one.

The argument 0 is the camera index; if more than one camera is connected, other indices (1, 2, and so on) select the other cameras. Frames can then be read from the camera through this object for further processing or display.
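If you are unsure which index your camera uses, one option is to try a few indices and keep the first one that opens. This is a sketch under assumptions: the helper open_first_camera and its opener parameter are hypothetical names introduced here for illustration, while VideoCapture, isOpened, and release are the OpenCV calls it relies on.

```python
def open_first_camera(indices=(0, 1, 2), opener=None):
    """Return (index, capture) for the first camera that opens, or (None, None)."""
    if opener is None:
        # Imported lazily so the helper can be exercised without OpenCV installed.
        import cv2
        opener = cv2.VideoCapture
    for index in indices:
        cap = opener(index)
        if cap.isOpened():
            return index, cap
        cap.release()  # free the handle before trying the next index
    return None, None
```

In the tutorial script this could replace the direct call, for example index, cap = open_first_camera(), followed by a check that cap is not None.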

Initializing the FER Model

Next, we initialize the FER model. The FER library has a pre-trained model that can detect emotions from facial expressions.

detector = FER()

This code creates a FER instance from the fer package to analyze facial expressions in images or videos and detect emotions like happiness, sadness, and anger. The detector object is ready to process images and identify emotions for each detected face.

Capturing Frames

In the main loop, we capture frames from the webcam. The cap.read() function reads a frame from the webcam and returns two values: a boolean ret indicating whether the frame was read successfully, and the frame itself.

while True:
    ret, frame = cap.read()
    if not ret:
        print("Can't receive frame (stream end?). Exiting ...")
        break

This code continuously reads frames from a video capture object (cap) in an infinite loop. If a frame is successfully read (ret is True), the loop continues to process the frame. If the frame cannot be retrieved (ret is False), indicating the end of the video stream or an error, it prints an error message and exits the loop.
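The same read-until-failure pattern can also be wrapped in a generator, which keeps the main loop short. This is only a sketch: read_frames is a hypothetical helper name, and it assumes nothing beyond the read method described above.

```python
def read_frames(cap):
    """Yield frames from a capture object until a read fails."""
    while True:
        ret, frame = cap.read()
        if not ret:
            # End of stream or a camera error: stop iterating.
            break
        yield frame
```

With this helper, the loop could be written as for frame in read_frames(cap): and the body would contain only the detection and display steps.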

Emotion Detection

Given a frame, we detect emotions using the FER model. The function detect_emotions returns a list of dictionaries, where each contains the bounding box of a face along with the corresponding emotions with their respective scores.

# Detect emotions in the frame

faces = detector.detect_emotions(frame)

The code above uses the detector object to analyze the frame for facial expressions, detecting and returning a list of emotions for each face identified in the frame. The detect_emotions method processes the image data to determine the emotions being expressed by any faces present.
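To make the shape of that result concrete, here is a small sketch that runs on hand-written data instead of a live frame. The sample values are made up for illustration and only some emotion labels are shown; top_emotions is a hypothetical helper name.

```python
# Made-up sample in the shape described above; real scores will differ.
sample_faces = [
    {
        "box": [120, 80, 96, 96],  # x, y, width, height
        "emotions": {"angry": 0.02, "happy": 0.91, "neutral": 0.05, "sad": 0.02},
    },
    {
        "box": [300, 60, 90, 90],
        "emotions": {"angry": 0.10, "happy": 0.15, "neutral": 0.70, "sad": 0.05},
    },
]


def top_emotions(faces):
    """Return one (box, emotion, score) tuple per detected face."""
    results = []
    for face in faces:
        # Pick the label whose score is highest for this face.
        emotion, score = max(face["emotions"].items(), key=lambda item: item[1])
        results.append((tuple(face["box"]), emotion, score))
    return results


for box, emotion, score in top_emotions(sample_faces):
    print(f"{box}: {emotion} ({score:.2f})")
```

An empty list simply means no face was found in the frame, so the loop body is skipped.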

Displaying Results

For each detected face, we draw a rectangle around the face and display the emotion with the highest score. We use OpenCV's rectangle and putText functions for this purpose.

# Drawing rectangles around faces, and displaying emotions
for face in faces:
    x, y, w, h = face["box"]
    # getting the emotion with the max score
    emotion, score = max(face["emotions"].items(), key=lambda item: item[1])
    # drawing rectangle around the face
    cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
    # displaying the label at the top of the bounding box
    cv2.putText(frame, f"{emotion}: {score:.2f}", (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, (255, 0, 0), 2)

The above code iterates over the detected faces, extracts their coordinates and dimensions, and determines the emotion with the highest score from each face's emotion data. It then draws a blue rectangle around each face on the image frame (OpenCV uses BGR color order, so (255, 0, 0) is blue) and displays the most likely emotion along with its score above the rectangle.
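One detail worth handling is a face near the top edge of the frame, where y - 10 can become negative and the label is drawn outside the image. A possible way to guard against this is sketched below; label_origin, margin, and min_y are hypothetical names chosen for this example.

```python
def label_origin(x, y, margin=10, min_y=20):
    """Return the (x, y) text origin for a label drawn above a face box.

    cv2.putText treats the origin as the bottom-left corner of the text, so a
    box close to the top edge would push the label off-screen; clamp it instead.
    """
    return max(x, 0), max(y - margin, min_y)
```

The result can be passed as the origin argument of cv2.putText in place of (x, y - 10).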

Finally, we display the resulting frame using cv2.imshow. The loop runs until the user presses the 'q' key.

    # Display the output frame (still inside the while loop)
    cv2.imshow('Facial Emotion Recognition', frame)
    # breaking the loop
    if cv2.waitKey(1) == ord('q'):
        break

# release webcam and destroy windows
cap.release()
cv2.destroyAllWindows()

This code snippet displays the current frame from the video feed in a window titled 'Facial Emotion Recognition' using OpenCV's imshow function. It then calls cv2.waitKey(1), which waits about one millisecond for a key press; if the 'q' key is pressed, the loop terminates. After exiting the loop, the code releases the webcam and closes all OpenCV windows to clean up resources.
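On some systems the value returned by cv2.waitKey may carry extra high bits, so a common precaution is to compare only the low byte. The sketch below illustrates that idea; is_quit_key is a hypothetical helper, not an OpenCV function.

```python
def is_quit_key(key_code, quit_char="q"):
    """Return True when the code returned by cv2.waitKey matches quit_char."""
    # cv2.waitKey returns -1 when no key was pressed during the wait.
    if key_code == -1:
        return False
    # Keep only the low byte, since some platforms set additional high bits.
    return (key_code & 0xFF) == ord(quit_char)
```

In the loop, the check would then read if is_quit_key(cv2.waitKey(1)): followed by break.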

Running the Code

To run the above code, you have to execute the Python script. If everything is set up correctly, a window will open displaying the webcam feed with rectangles around detected faces and labels indicating the recognized emotions.

python facial_emotion_recognition.py

The above command runs a Python script named facial_emotion_recognition.py, which analyzes facial expressions in images or videos to detect emotions.

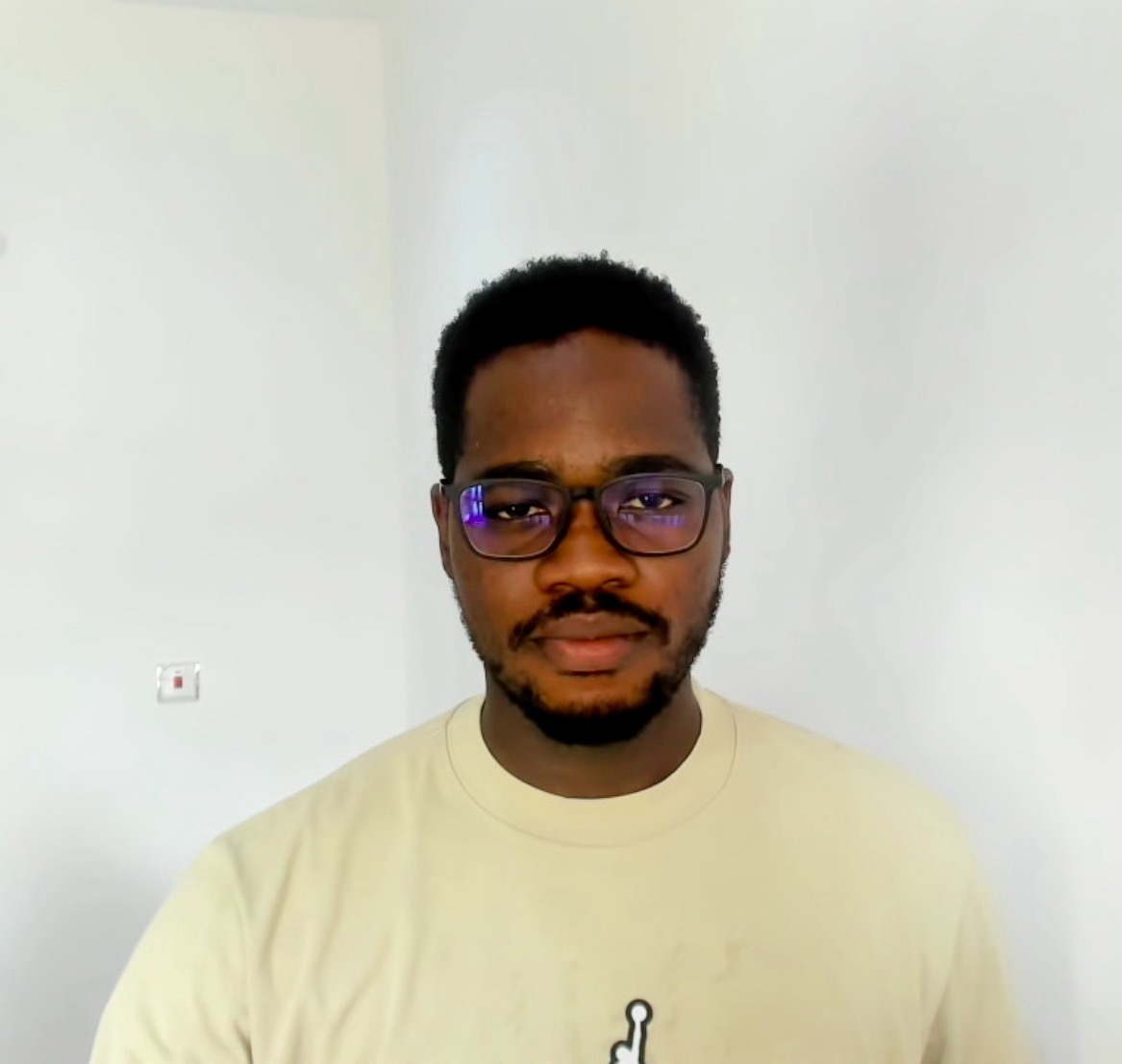

Below is the output of the above code

Customizing the Application

The steps below show how to customize the captured video frame.

Adjusting Camera Settings

You can adjust the camera settings, such as resolution and frame rate, using the set method of the VideoCapture object. The snippet below instead fixes the size of the displayed window at 640x480.

Place the size definitions near the top of your script, right after the imports and the webcam initialization:

import cv2

from deepface import DeepFace

# Initialize the webcam

cap = cv2.VideoCapture(0)

# Define the desired output window size

output_width = 640 # Width of the window frame

output_height = 480 # Height of the window frame

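This code defines the width and height of the output window as 640x480 pixels; each frame is resized to these dimensions with cv2.resize before it is displayed. Note that this snippet imports DeepFace rather than FER. DeepFace is the library used in the final listing and can be installed with pip install deepface.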
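For the set method mentioned at the start of this section, a minimal sketch could look like the following. The helper name request_resolution is hypothetical; cap.set, cap.get, and the two CAP_PROP constants are standard OpenCV. The fallback values 3 and 4 are the numeric values of those constants and are only used when cv2 cannot be imported.

```python
try:
    import cv2
    WIDTH_PROP = cv2.CAP_PROP_FRAME_WIDTH
    HEIGHT_PROP = cv2.CAP_PROP_FRAME_HEIGHT
except ImportError:
    # Numeric values of the two OpenCV constants, used only if cv2 is missing.
    WIDTH_PROP, HEIGHT_PROP = 3, 4


def request_resolution(cap, width, height):
    """Ask the camera for a resolution and return the size it reports back."""
    cap.set(WIDTH_PROP, width)
    cap.set(HEIGHT_PROP, height)
    # The driver may ignore or round the request, so read the values back.
    return int(cap.get(WIDTH_PROP)), int(cap.get(HEIGHT_PROP))
```

Unlike resizing each frame afterwards, this asks the camera itself to deliver smaller frames, which can reduce the work done per frame when the camera supports the requested size.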

Below is the output after reducing the frame size:

Modifying Emotion Detection Parameters

The FER library can be configured, for example by adjusting the detection threshold. Please refer to the FER documentation for details on the supported parameters and how to set them.
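Independently of the library's own options, you can also apply a threshold to the results after detection. The sketch below is an assumption-based example, not a FER parameter: filter_confident is a hypothetical helper that expects the list-of-dictionaries shape returned by detect_emotions, with scores between 0 and 1.

```python
def filter_confident(faces, threshold=0.5):
    """Keep only faces whose strongest emotion score is at least threshold."""
    kept = []
    for face in faces:
        # default=0.0 covers the unlikely case of an empty emotions dictionary.
        best_score = max(face["emotions"].values(), default=0.0)
        if best_score >= threshold:
            kept.append(face)
    return kept
```

Calling faces = filter_confident(detector.detect_emotions(frame)) before the drawing step would hide labels for uncertain predictions.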

Improving the Output

You could enhance the display by overlaying information such as a timestamp or other graphics. OpenCV provides functions to draw a wide variety of shapes and add text to images. For example, the following snippet overlays the current date and time on the displayed frame:

from datetime import datetime

# Get the current date and time
timestamp = datetime.now().strftime('%Y-%m-%d %H:%M:%S')
# Add date and time to the frame
cv2.putText(frame, timestamp, (10, output_height - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)

This code imports the datetime class from the datetime module and gets the current date and time formatted as a string. It then uses OpenCV's putText function to overlay this timestamp onto a frame at the specified position, font, size, and color.
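If the frame is not exactly output_height pixels tall, the fixed position can land in the middle of the image. One way around this, sketched here with a hypothetical helper named timestamp_label, is to derive the position from the actual frame height.

```python
from datetime import datetime


def timestamp_label(frame_height, now=None, margin=10):
    """Return (text, origin) for a timestamp near the bottom-left corner."""
    now = now or datetime.now()
    text = now.strftime("%Y-%m-%d %H:%M:%S")
    # putText places the bottom-left corner of the text at this origin.
    origin = (margin, frame_height - margin)
    return text, origin
```

With an OpenCV frame, the height is frame.shape[0], so the call would be text, origin = timestamp_label(frame.shape[0]), and both values can then be passed to cv2.putText.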

Below is the final code, which adds the timestamp to the camera frame in green text. It uses DeepFace for the emotion analysis.

import cv2

from deepface import DeepFace

from datetime import datetime

# Initialize the webcam

cap = cv2.VideoCapture(0)

# Define the desired output window size

output_width = 640 # Width of the window frame

output_height = 480 # Height of the window frame

# Define a confidence threshold for emotion detection
# (DeepFace reports emotion scores as percentages from 0 to 100)
confidence_threshold = 50

while True:
    # Capture frame-by-frame
    ret, frame = cap.read()
    if not ret:
        print("Can't receive frame (stream end?). Exiting ...")
        break

    # Analyze the frame for emotion using a higher resolution for accuracy
    try:
        result = DeepFace.analyze(frame, actions=['emotion'], enforce_detection=False, align=True)
    except Exception as e:
        print(f"Error: {e}")
        continue

    if result:
        for face in result:
            emotion = face['dominant_emotion']
            score = face['emotion'][emotion]
            if score >= confidence_threshold:  # Only display emotions with high confidence
                x, y, w, h = face['region']['x'], face['region']['y'], face['region']['w'], face['region']['h']
                # Draw a rectangle around the face
                cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
                # Display the emotion label with a confidence score
                cv2.putText(frame, f"{emotion}: {score:.2f}", (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, (255, 0, 0), 2)

    # Add date and time to the frame
    timestamp = datetime.now().strftime('%Y-%m-%d %H:%M:%S')
    cv2.putText(frame, timestamp, (10, output_height - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)

    # Resize the frame to the desired output size
    resized_frame = cv2.resize(frame, (output_width, output_height))

    # Display the resulting frame
    cv2.imshow('Facial Emotion Recognition', resized_frame)

    # Break the loop on the 'q' key press
    if cv2.waitKey(1) == ord('q'):
        break

# Release the webcam and close the windows
cap.release()
cv2.destroyAllWindows()

The final Output:

Troubleshooting

If the code does not run or does not behave as expected, try the troubleshooting steps below:

- Check if your camera is connected and working.

- Install the necessary packages: opencv-python and fer (plus deepface if you run the final listing).

- If the script fails, review the error messages printed to the console and consult the documentation of the library they refer to.

Other common issues include missing/incorrect dependencies, webcam problems, and parameter setting issues.

Conclusion

In this tutorial, we developed a real-time facial emotion recognition system using Python. We utilized OpenCV for handling webcam inputs and the FER library for detecting and recognizing emotions.

By following this tutorial, you can build and design your very own emotion recognition application for all sorts of different use cases.

Frequently Asked Questions

How do machine learning algorithms improve facial recognition applications?

Machine learning algorithms improve facial recognition by enhancing the accuracy and efficiency of recognizing and verifying faces. These algorithms use neural networks to process and analyze facial features from images and videos, learning from collected data to accurately identify individuals even under varying conditions.

How secure is facial recognition compared to other biometric methods?

Facial recognition is generally secure, but its effectiveness depends on the system's sophistication. While it may be susceptible to certain types of spoofing, advancements in technology, including 3D facial mapping, have significantly improved its security.

How does image recognition work using deep learning techniques?

Image recognition works by using deep learning techniques to automatically identify objects within digital images. Deep learning models, specifically deep neural networks, extract hierarchical features from images, which helps the system understand images much like human vision. This process involves training the model on large datasets to recognize various objects and scenes.

How are deep learning algorithms applied in image recognition online applications?

Deep learning algorithms are applied in image recognition online applications to efficiently and scalably handle various image recognition tasks. These applications use popular deep learning models to extract features and achieve image recognition, supporting tasks such as face recognition, picture recognition, and more. These models are trained to process large volumes of images and videos, ensuring high performance and adaptability to new data.

Joel Olawanle is a Software Engineer and Technical Writer with over three years of experience helping companies communicate their products effectively through technical articles.
